Found 54 results for "Ops" (运维)


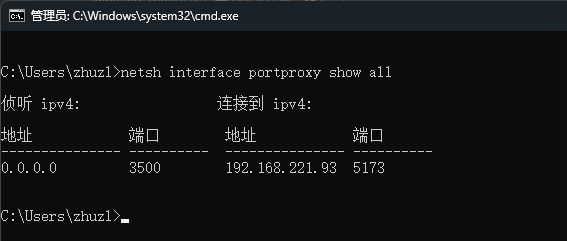

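Port Mapping for a WSL2 Environment

Background

I have recently been doing custom development on ClaudeCodeUI. After a round of wrestling with my local Windows 11 environment still left the project's dependencies broken, I moved development into WSL and, after a while, got used to it. When integrating Ory Hydra for single sign-on, the local development environment had to be accessed via `localhost`; otherwise the integration would require HTTPS. So I wanted to map the port in the WSL environment to the Windows host and integrate from there. The do-everything Nginx would certainly work, but exposing WSL ports externally must be a very common need, so is there a lighter-weight solution?

Solution

A quick look through the official documentation did turn up a section on port forwarding for WSL:

https://learn.microsoft.com/en-us/windows/wsl/networking#accessing-a-wsl-2-distribution-from-your-local-area-network-lan

Chinese version: https://learn.microsoft.com/zh-cn/windows/wsl/networking#accessing-a-wsl-2-distribution-from-your-local-area-network-lan

Based on the documentation, a port mapping can be added with a command like the following (run it from an administrator prompt, since changing portproxy rules requires elevation):

    netsh interface portproxy add v4tov4 listenport=3500 listenaddress=0.0.0.0 connectport=5173 connectaddress=192.168.221.93

Where:

- `listenport`: the port on the Windows host
- `connectport`: the port in the WSL environment
- `listenaddress`: the local address to listen on; `listenaddress=0.0.0.0` listens on all IPv4 addresses
- `connectaddress`: the IP address of the WSL environment, which can be obtained with `wsl hostname -I`

Further reading

netsh command-line tool documentation: https://learn.microsoft.com/zh-cn/windows-server/administration/windows-commands/netsh

List the configured proxies:

    netsh interface portproxy show all

Delete a port proxy:

    netsh interface portproxy delete v4tov4 listenport=3500 listenaddress=0.0.0.0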
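The WSL address may change (for example after WSL restarts), in which case the rule has to be re-created. As a minimal sketch, assuming Python 3 on the Windows host, the hypothetical helpers below only assemble the `netsh` strings described above and pick the first IPv4 address out of `hostname -I` output; they do not execute anything with elevated rights.

```python
import ipaddress
import subprocess


def first_ipv4(hostname_output: str) -> str:
    """Return the first IPv4 address in `hostname -I` output (space-separated)."""
    for token in hostname_output.split():
        try:
            addr = ipaddress.ip_address(token)
        except ValueError:
            continue  # skip anything that is not an IP literal
        if addr.version == 4:
            return str(addr)
    raise ValueError("no IPv4 address found")


def _check_port(port: int) -> int:
    if not 1 <= port <= 65535:
        raise ValueError(f"invalid port: {port}")
    return port


def portproxy_add(listen_port: int, connect_port: int, connect_address: str,
                  listen_address: str = "0.0.0.0") -> str:
    """Build the netsh command forwarding host listen_port to WSL connect_port."""
    ipaddress.IPv4Address(connect_address)  # raises ValueError if not IPv4
    return ("netsh interface portproxy add v4tov4 "
            f"listenport={_check_port(listen_port)} listenaddress={listen_address} "
            f"connectport={_check_port(connect_port)} connectaddress={connect_address}")


def portproxy_delete(listen_port: int, listen_address: str = "0.0.0.0") -> str:
    """Build the matching delete command (same listenport/listenaddress pair)."""
    return ("netsh interface portproxy delete v4tov4 "
            f"listenport={_check_port(listen_port)} listenaddress={listen_address}")


def wsl_ip() -> str:
    """Windows only: ask WSL for its addresses via `wsl hostname -I`."""
    result = subprocess.run(["wsl", "hostname", "-I"],
                            capture_output=True, text=True, check=True)
    return first_ipv4(result.stdout)
```

For example, `portproxy_add(3500, 5173, wsl_ip())` yields the same command as in the post when WSL reports 192.168.221.93. Building the string rather than running it keeps the administrator step explicit.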


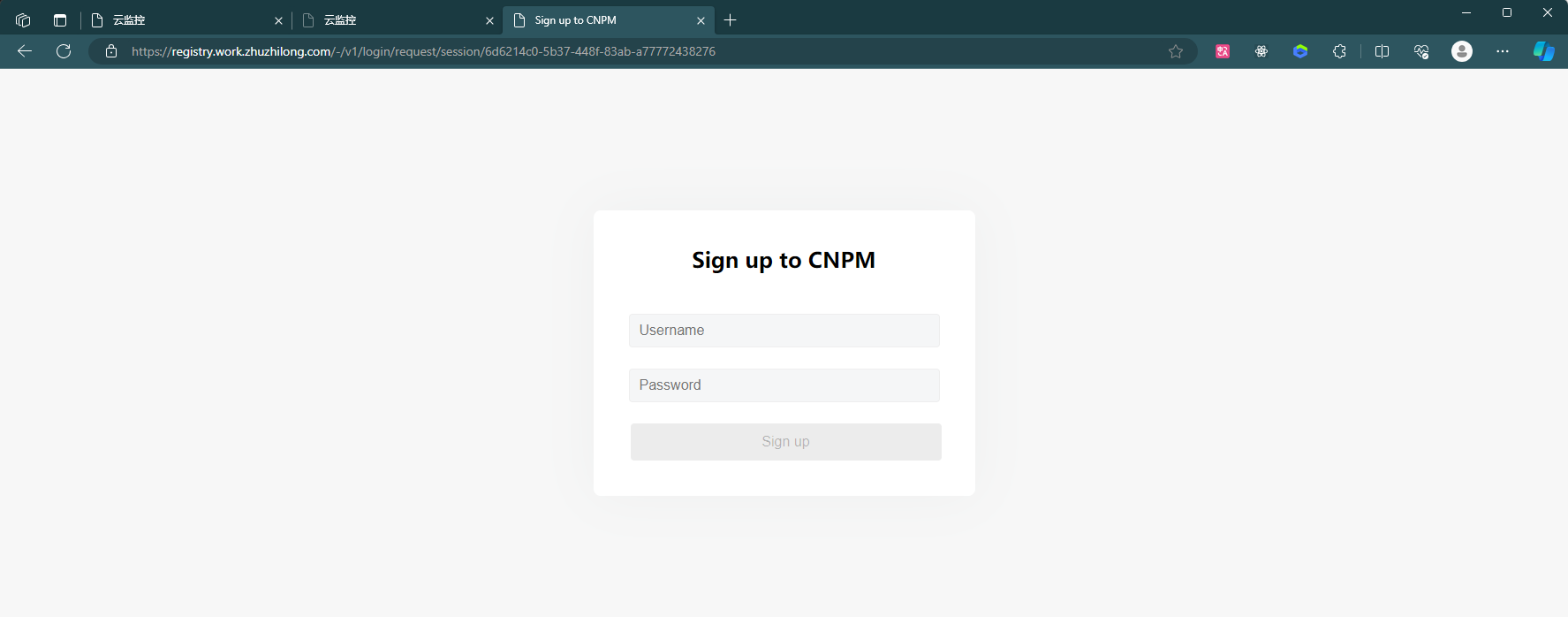

Deploying a Private npm Registry with cnpmcore

Some internal projects recently needed a private npm registry. After a bit of research I found that the Taobao mirror I use every day (https://npmmirror.com) is built on the open-source cnpmcore.

Deployment

Before deploying, read deploy-in-docker.md in the docs directory of the cnpmcore project (https://github.com/cnpm/cnpmcore). This setup uses docker compose, and the following services must be prepared in advance:

- Database: MySQL or PostgreSQL; initialize the schema with the SQL files in the project's db directory
- Cache: Redis
- File storage: currently Alibaba Cloud OSS, AWS S3, and S3-compatible MinIO are supported; this example uses MinIO

The docker-compose.yaml file is as follows:

    services:
      cnpmcore:
        image: fengmk2/cnpmcore:latest-alpine
        container_name: cnpmcore
        restart: unless-stopped
        volumes:
          #- ./config.prod.js:/usr/src/app/config/config.prod.js
        environment:
          - TZ=Asia/Shanghai
          - CNPMCORE_CONFIG_REGISTRY=https://registryx.work.zhuzhilong.com
          - CNPMCORE_CONFIG_SOURCE_REGISTRY=https://registry.npmmirror.com
          - CNPMCORE_CONFIG_SOURCE_REGISTRY_IS_CNPM=true
          - CNPMCORE_DATABASE_TYPE=MySQL
          - CNPMCORE_DATABASE_NAME=cnpmcore
          - CNPMCORE_DATABASE_HOST=local.work.zhuzhilong.com
          - CNPMCORE_DATABASE_PORT=3306
          - CNPMCORE_DATABASE_USER=zhuzl
          - CNPMCORE_DATABASE_PASSWORD=20xxxxxxxx
          - CNPMCORE_NFS_TYPE=s3
          - CNPMCORE_NFS_S3_CLIENT_ENDPOINT=http://minio-api.work.zhuzhilong.com
          - CNPMCORE_NFS_S3_CLIENT_REGION=cs
          - CNPMCORE_NFS_S3_CLIENT_BUCKET=cnpmcore
          - CNPMCORE_NFS_S3_CLIENT_ID=gfUiTxxxxxxxxlThJ6H
          - CNPMCORE_NFS_S3_CLIENT_SECRET=LgpUK32qfxxxxxxxxplyH4XVoB
          - CNPMCORE_NFS_S3_CLIENT_FORCE_PATH_STYLE=true
          - CNPMCORE_NFS_S3_CLIENT_DISABLE_URL=true
          - CNPMCORE_REDIS_HOST=local.work.zhuzhilong.com
          - CNPMCORE_REDIS_PORT=6379
          - CNPMCORE_REDIS_PASSWORD=88888888
          - CNPMCORE_REDIS_DB=1
        networks:
          - net-zzl
        ports:
          - 8140:7001
    networks:
      net-zzl:
        name: bridge_zzl
        external: true

After starting it with `docker compose up -d`, follow the startup log with `docker logs -f cnpmcore`. If all the connections are fine, output like the following indicates a successful start:

    2025-05-08 15:35:11,803 INFO 59 [@eggjs/core/lifecycle:ready_stat] end ready task /usr/src/app/node_modules/@eggjs/tegg-orm-plugin/app.js:didLoad, remain []
    2025-05-08 15:35:11,811 INFO 34 [master] app_worker#4:59 started at 7001, remain 0 (5825ms)
    2025-05-08 15:35:11,811 INFO 34 [master] egg started on http://127.0.0.1:7001 (7275ms)

Creating the admin account

Run the following command to create a user:

    npm adduser --registry=https://registry.work.zhuzhilong.com

It prints the following:

    C:\Users\zhuzl>npm adduser --registry=https://registry.work.zhuzhilong.com
    npm notice Log in on https://registry.work.zhuzhilong.com/
    Create your account at:
    https://registry.work.zhuzhilong.com/-/v1/login/request/session/6d6214c0-5b37-448f-83ab-a77772438276
    Press ENTER to open in the browser...

Following the prompt, open the registration link, as shown in the figure below:

If public registration is not enabled, it seems only an account named cnpmcore_admin can be registered; any other account name is rejected with "Public registration is not allowed".

After registering, log in with:

    npm login --registry=https://registry.work.zhuzhilong.com

Once logged in, check the current user with:

    npm whoami --registry=https://registry.work.zhuzhilong.com

The output looks like this:

Publishing packages to the private registry

After building, publish the package to the private registry we deployed with:

    npm publish --registry=https://registry.work.zhuzhilong.com

Before publishing, we usually need to specify which files get published, which is done with the `files` field of package.json. A complete example package.json:

    {
      "name": "cms",
      "version": "0.1.1",
      "private": false,
      "files": [
        "dist"
      ],
      "main": "dist/cms.js",
      "module": "dist/cms.mjs",
      "exports": {
        ".": {
          "import": "./dist/cms.mjs",
          "require": "./dist/cms.js"
        },
        "./style.less": "./dist/style.less"
      },
      "repository": {
        "type": "git",
        "url": "git@git.paratera.net:aicloud/frontend/cms.git"
      },
      "scripts": {
        "dev": "vite",
        "dev:blsc": "vite --mode blsc",
        "build": "vite build",
        "preview": "vite preview",
        "build:lib": "vite build --config vite.lib.config.mjs"
      },
      "dependencies": {
        "@ant-design/compatible": "^5.1.1",
        "@ant-design/pro-layout": "7.21.2",
        "@ant-design/icons": "^4.0.0",
        "@babel/plugin-transform-react-jsx": "^7.25.9",
        "@reduxjs/toolkit": "^2.5.0",
        "antd": "^5.11.0",
        "axios": "^1.8.4",
        "big.js": "^6.2.2",
        "dayjs": "^1.11.13",
        "echarts": "^5.6.0",
        "echarts-for-react": "^3.0.2",
        "history": "^5.3.0",
        "js-cookie": "^2.2.1",
        "lodash-es": "^4.17.21",
        "prop-types": "^15.8.1",
        "qs": "^6.13.0",
        "react": "^18.2.0",
        "react-copy-to-clipboard": "^5.1.0",
        "react-dom": "^18.2.0",
        "react-redux": "^9.0.0",
        "react-router-dom": "^6.20.0",
        "redux-logger": "^3.0.6",
        "remixicon": "^4.6.0",
        "spark-md5": "^3.0.2"
      },
      "devDependencies": {
        "@vitejs/plugin-legacy": "^5.2.0",
        "@vitejs/plugin-react": "^4.2.0",
        "autoprefixer": "^10.4.16",
        "dotenv": "^16.3.1",
        "less": "^4.2.0",
        "less-loader": "7",
        "msw": "^2.0.0",
        "postcss": "^8.4.31",
        "postcss-preset-env": "^9.3.0",
        "vite": "^5.0.10",
        "vite-plugin-html": "^3.2.0",
        "vite-plugin-node-polyfills": "^0.19.0"
      }
    }

The following shows `npm publish` successfully publishing to the private registry:

After publishing, the package and its version information appear in the database:

The corresponding files are also visible in MinIO:

Related links

cnpmcore GitHub repository: https://github.com/cnpm/cnpmcore

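For the cnpmcore deployment, the post judges a successful start by the `egg started on` line in `docker logs`. As a minimal sketch, assuming the log lines look exactly like the sample in the post, a hypothetical helper can pull the listening URL and startup time out of captured log text:

```python
import re

# The master's final startup line, e.g.
# "... INFO 34 [master] egg started on http://127.0.0.1:7001 (7275ms)"
EGG_STARTED = re.compile(r"\[master\] egg started on (?P<url>\S+) \((?P<ms>\d+)ms\)")


def find_startup(log_text: str) -> "tuple[str, int] | None":
    """Return (url, startup_ms) from the first 'egg started on' line, or None."""
    match = EGG_STARTED.search(log_text)
    if match is None:
        return None  # not started yet, or the log format differs from the sample
    return match.group("url"), int(match.group("ms"))
```

The log text could be captured with `subprocess.run(["docker", "logs", "cnpmcore"], capture_output=True, text=True)`; checking both stdout and stderr is the safer assumption, since the post does not say which stream the container writes to.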

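In the cnpmcore post, the `files` whitelist decides what `npm publish` uploads, and it is easy for an `exports` target to fall outside it. The following is a simplified sketch (hypothetical helper names): a `files` entry is treated as covering a path if it is that path or one of its parent directories, glob patterns are not handled, and the `main` file is skipped because npm always packs it.

```python
import json
from pathlib import PurePosixPath


def _targets(node) -> list[str]:
    """Flatten string targets from an `exports` value (string or nested conditions)."""
    if isinstance(node, str):
        return [node]
    if isinstance(node, dict):
        return [t for value in node.values() for t in _targets(value)]
    return []


def uncovered_paths(pkg: dict) -> list[str]:
    """Paths referenced by `module`/`exports` that no `files` entry covers."""
    entries = [PurePosixPath(e) for e in pkg.get("files", [])]
    always = {PurePosixPath(pkg["main"])} if "main" in pkg else set()
    refs = _targets(pkg.get("exports", {}))
    if isinstance(pkg.get("module"), str):
        refs.append(pkg["module"])
    missing = set()
    for ref in refs:
        path = PurePosixPath(ref)  # normalizes "./dist/x" to "dist/x"
        if path in always:
            continue
        if not any(path == e or e in path.parents for e in entries):
            missing.add(str(path))
    return sorted(missing)


def check_package_json(path: str = "package.json") -> list[str]:
    with open(path, encoding="utf-8") as fh:
        return uncovered_paths(json.load(fh))
```

With the example package.json from the post (`"files": ["dist"]`), `check_package_json()` should return an empty list; `npm pack --dry-run` remains the authoritative way to see what would actually be published.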
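Common File-Sharing Protocols

A brief look at the common file-sharing protocols (NFS, FTP, SMB, and WebDAV): their pros and cons, and where each one fits. The write-up is fairly long; if you only want the key points, skip to the summary at the end.

NFS

NFS (Network File System) is mainly used to share files between Unix/Linux systems.

Pros

- Efficiency: performance is high when sharing files between Unix/Linux systems, especially at large scale.
- Transparency: users access remote files just like a local file system.
- Stability: proven over a long history, and stable in a suitable environment.

Cons

- Platform limitations: mainly suited to Unix/Linux; support on other operating systems is comparatively weak.
- Complex configuration: setup and management can be hard for users unfamiliar with Unix/Linux.
- Security: securing it is complicated and needs careful configuration to prevent unauthorized access.

Use cases

- Server environments in large enterprises and research institutions, especially networks dominated by Unix/Linux.
- Scenarios that need high-performance file sharing, such as big-data processing and high-performance computing.

FTP

FTP (File Transfer Protocol) transfers files between computers.

Pros

- Broad support: almost every operating system supports FTP.
- Simplicity: basic uploads and downloads are easy to learn.
- Maturity: an old but very mature way to transfer files.

Cons

- Weak security: data can be intercepted in transit, especially with plain FTP, which sends credentials and data unencrypted.
- Not suited to live access: it is built for uploading and downloading, not for reading and writing files in place.
- Limited functionality: compared with the other protocols, its file-management and collaboration features are limited.

Use cases

- Webmasters uploading files to and downloading files from servers.
- Public download services, such as software distribution and document sharing.

SMB

SMB (Server Message Block) shares files, printers, and other resources between Windows systems, and between Windows and other operating systems.

Pros

- Ease of use: tightly integrated into Windows, so file and printer sharing need little configuration.
- Rich features: user authentication and permission management allow fine-grained access control.
- Reasonable cross-platform support: although mainly a Windows protocol, it is also implemented on other operating systems (for example, Samba on Linux).

Cons

- Performance: it can become a bottleneck in large networks.
- Security risk: a misconfiguration can leave vulnerabilities that attackers exploit.
- Compatibility: different versions of the SMB protocol may not interoperate.

Use cases

- The first choice for sharing files and printers among Windows PCs in an office.
- Convenient sharing of files and media between devices on a home network.

WebDAV

WebDAV (Web-based Distributed Authoring and Versioning) is an extension of HTTP for managing and collaborating on files over a network.

Pros

- Strong cross-platform support: it runs over standard HTTP(S), so clients exist for almost every operating system and device, and many servers also let you browse files in a web browser.
- Collaboration: suited to multiple people editing and managing files online; the core protocol provides locking, while versioning comes from an extension (DeltaV) that only some servers implement.
- Better security: traffic can be encrypted with HTTPS.

Cons

- Network dependence: it needs a stable connection, and an unstable network hurts the experience.
- Limited performance: it can be slower than a local file system.
- Configuration: advanced features may require some technical knowledge.

Use cases

- Online document-collaboration platforms where many people edit and manage files at the same time.
- Remote work, where users access and manage files conveniently over the network.

Summary

My top picks are SMB and WebDAV. On an internal LAN, use SMB: its performance and stability may lag a little, but it is compatible with practically every platform. For access from outside, use WebDAV: since it is an HTTP extension, exposing it externally only requires changing the externally reachable port, and some servers provide a web UI so files can be browsed directly in the browser, which is quick and convenient.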
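The summary's point that WebDAV is "just HTTP" can be made concrete: listing a folder is an HTTP `PROPFIND` request with a `Depth` header and a small XML body (RFC 4918). This is a minimal sketch using only Python's standard library; it builds the request without sending it, and `dav.example.com` is a placeholder URL.

```python
import urllib.request

# Body asking for all properties of the target and its members
# (the "allprop" form of PROPFIND from RFC 4918).
PROPFIND_ALLPROP = (
    b'<?xml version="1.0" encoding="utf-8"?>'
    b'<propfind xmlns="DAV:"><allprop/></propfind>'
)


def propfind_request(url: str, depth: str = "1") -> urllib.request.Request:
    """Build, but do not send, a WebDAV directory-listing request.

    Depth "0" asks about the collection itself; "1" also lists its direct members.
    """
    if depth not in ("0", "1", "infinity"):
        raise ValueError("Depth must be '0', '1' or 'infinity'")
    return urllib.request.Request(
        url,
        data=PROPFIND_ALLPROP,
        method="PROPFIND",
        headers={"Depth": depth, "Content-Type": "application/xml; charset=utf-8"},
    )
```

Sent with `urllib.request.urlopen(req)` to a real server (plus whatever authentication it requires), the expected reply is a `207 Multi-Status` XML document describing each entry. Because it is ordinary HTTP, the same request passes through reverse proxies and HTTPS without special handling, which is what makes WebDAV easy to expose externally.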


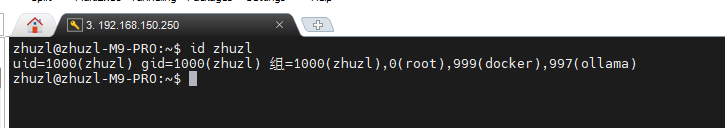

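PUID and PGID

Some Docker containers need PUID and PGID configured so that the container has the right permissions to access files on the host.

{alert type="info"}When deploying a Docker configuration, set UID and GID according to your actual setup; don't reflexively fill in root or nobody, as that is very likely to cause all kinds of permission problems, for example the deployed application not working properly, files shared with companion applications not being editable, or no read/write access to SMB files.{/alert}

What PUID and PGID are

PUID (user identifier) is a number that uniquely identifies a user. It is common in multi-user systems and container environments, where it keeps user permissions and file access consistent. PGID (group identifier) is a number that uniquely identifies a user group; it makes it easy to manage permissions and access control for a group of users and is widely used wherever shared resources are managed. When deploying Docker applications, PUID and PGID may also be named UID and GID.

What they are for

PUID and PGID specify the user and group identity, so that permissions on files and directories stay consistent and correct across different runtime environments. Setting appropriate values keeps permissions aligned, and data isolated, between the user inside the container and the host system or other containers.

How to get them

Use the command line to look up a user's UID and GID: running `id <username>` in a terminal shows that user's details:

    id zhuzl

This shows the UID and GID of the user zhuzl.

How to use them

Not every Docker container needs these two parameters; set them only when the image's documentation explicitly calls for them. Emby, for example, needs UID and GID here:

EasyImage, for example, needs PUID and PGID here: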
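The `id` lookup above can also be scripted when generating compose files. This is a minimal sketch, assuming a Linux host (it uses the Unix-only `pwd` and `grp` modules); the function names are hypothetical, and the `UID`/`GID` variant covers images such as Emby that use those names instead of `PUID`/`PGID`.

```python
import grp
import os
import pwd


def ids_for(username: str) -> tuple[int, int]:
    """Return (uid, primary gid) from the passwd database, like `id <user>`."""
    entry = pwd.getpwnam(username)  # raises KeyError for unknown users
    return entry.pw_uid, entry.pw_gid


def describe(username: str) -> str:
    """Format the first two fields the way `id` prints them."""
    uid, gid = ids_for(username)
    return f"uid={uid}({username}) gid={gid}({grp.getgrgid(gid).gr_name})"


def compose_env(username: str, uid_key: str = "PUID", gid_key: str = "PGID") -> list[str]:
    """Environment entries for docker-compose; some images expect UID/GID instead."""
    uid, gid = ids_for(username)
    return [f"{uid_key}={uid}", f"{gid_key}={gid}"]


def current_username() -> str:
    """Name of the user running this script (a handy default on the Docker host)."""
    return pwd.getpwuid(os.getuid()).pw_name
```

For instance, `compose_env("zhuzl")` returns entries that can be pasted under `environment:`; the values come from the host's passwd database, so run it on the host that owns the mounted directories, not inside a container.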


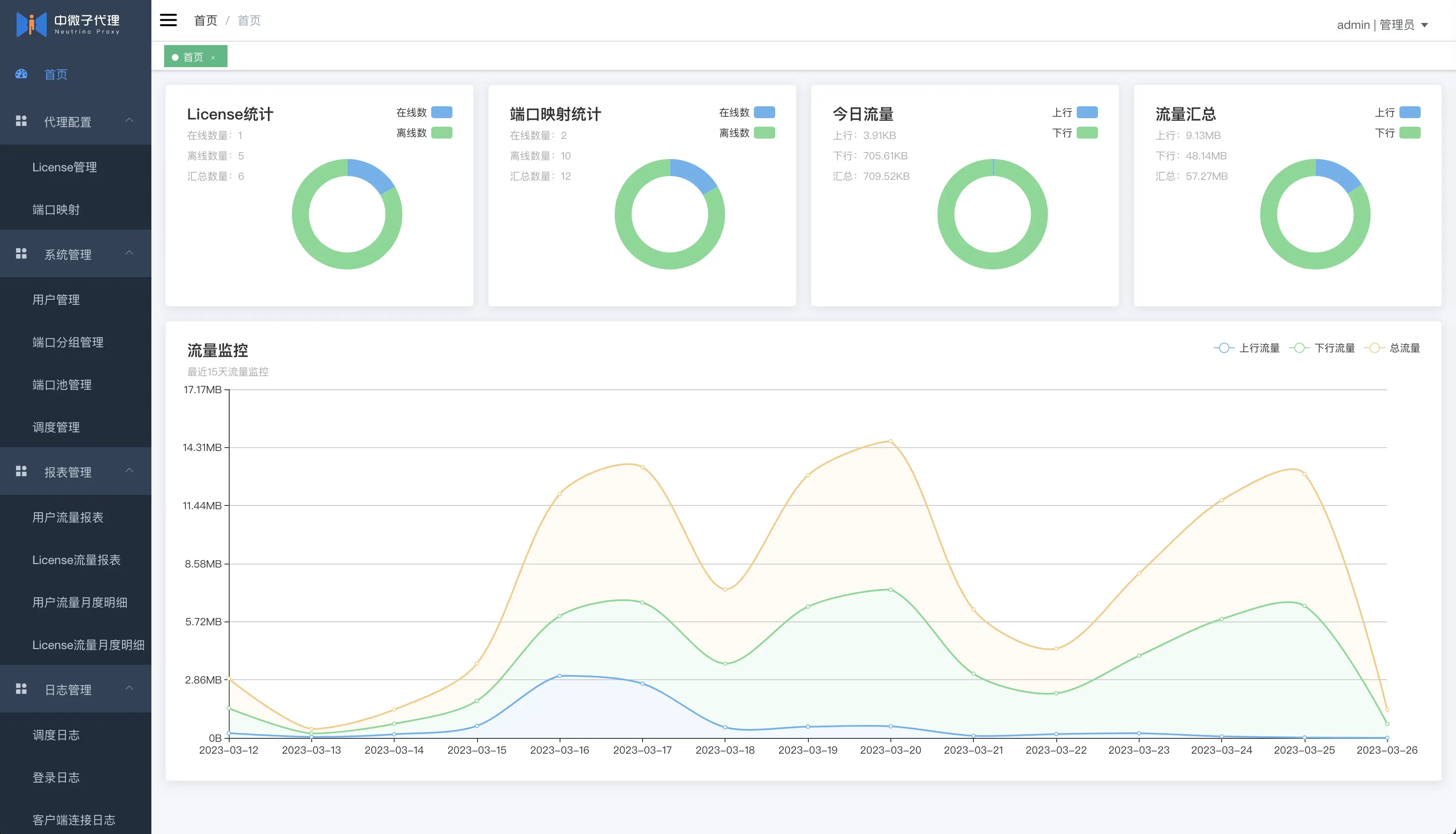

FRP Upgrade Notes

Background

FRP is an excellent NAT-traversal and reverse-proxy tool. It lets users reach internal network resources from the public internet without complex network configuration, which is a big productivity boost. A programmer's computer is basically never switched off, so when you occasionally need to grab some files from another machine or handle a bit of work remotely, this kind of tool is invaluable.

When I deployed it in 2023, the latest version was FRP 0.51.2, deployed with docker. Starting with 0.52.0, FRP moved its configuration format from INI to TOML (INI is deprecated), and because the configuration keys changed as well, the old config files could not be reused as-is, so I never upgraded. At the end of the year, with the urgent work out of the way, I decided to upgrade.

Server upgrade

The server runs on a Tencent Cloud server in mainland China, orchestrated with docker compose. The upgraded docker-compose.yaml:

    services:
      frps_work:
        restart: always
        network_mode: host
        volumes:
          - ./frps.toml:/etc/frp/frps.toml
          - ./logs:/etc/frp/logs
        container_name: frps_work
        image: snowdreamtech/frps:0.61.1

frps.toml:

    bindPort = 7200
    auth.method = "token"
    auth.token = "admin"
    vhostHTTPPort = 89
    vhostHTTPSPort = 4444
    subDomainHost = "work.zhuzhilong.com"
    enablePrometheus = true
    webServer.addr = "0.0.0.0"
    webServer.port = 7202
    # webauth-based authentication is already enabled on the nginx proxy, so I don't enable it here
    #webServer.user = "zzl"
    #webServer.password = "password"

The configuration above sets up an HTTP vhost on port 89 and uses the wildcard domain `*.work.zhuzhilong.com` as the subdomain suffix. We then use Nginx Proxy Manager to configure a proxy that forwards all `*.work.zhuzhilong.com` requests to port 89:

Enable SSL so that the proxied services are reachable over both HTTP and HTTPS:

Client upgrade

I previously bought a mini PC that sits in the office as a development server. It runs Ubuntu, with the frpc client deployed via docker. The modified docker-compose.yaml:

    services:
      frpczzl:
        restart: always
        network_mode: host
        volumes:
          # - ./frpc.ini:/etc/frp/frpc.ini
          - ./frpc.toml:/etc/frp/frpc.toml
          - ./logs/:/etc/frp/logs/
        container_name: frpczzl
        # image: snowdreamtech/frpc:0.51.2
        image: snowdreamtech/frpc:0.61.1

The core of frpc.toml:

    user = "work"
    serverAddr = "119.29.149.159"
    serverPort = 7200
    auth.token = "admin"

    # for tcp
    [[proxies]]
    name = "work-mysql-3306"
    type = "tcp"
    localIP = "127.0.0.1"
    localPort = 3306
    remotePort = 3402

    # for web
    [[proxies]]
    name = "work-http-123"
    httpUser = "zhuzl"
    httpPassword = "zhuzl"
    type = "http"
    localIP = "127.0.0.1"
    localPort = 80
    subdomain = "123"

    [[proxies]]
    name = "work-http-spug"
    type = "http"
    localIP = "127.0.0.1"
    localPort = 80
    subdomain = "spug"

Dashboard

Since we enabled webServer in frps, the dashboard is available on port 7202. Screenshot:

Related links:

- GitHub: https://github.com/fatedier/frp
- Website: https://gofrp.org/


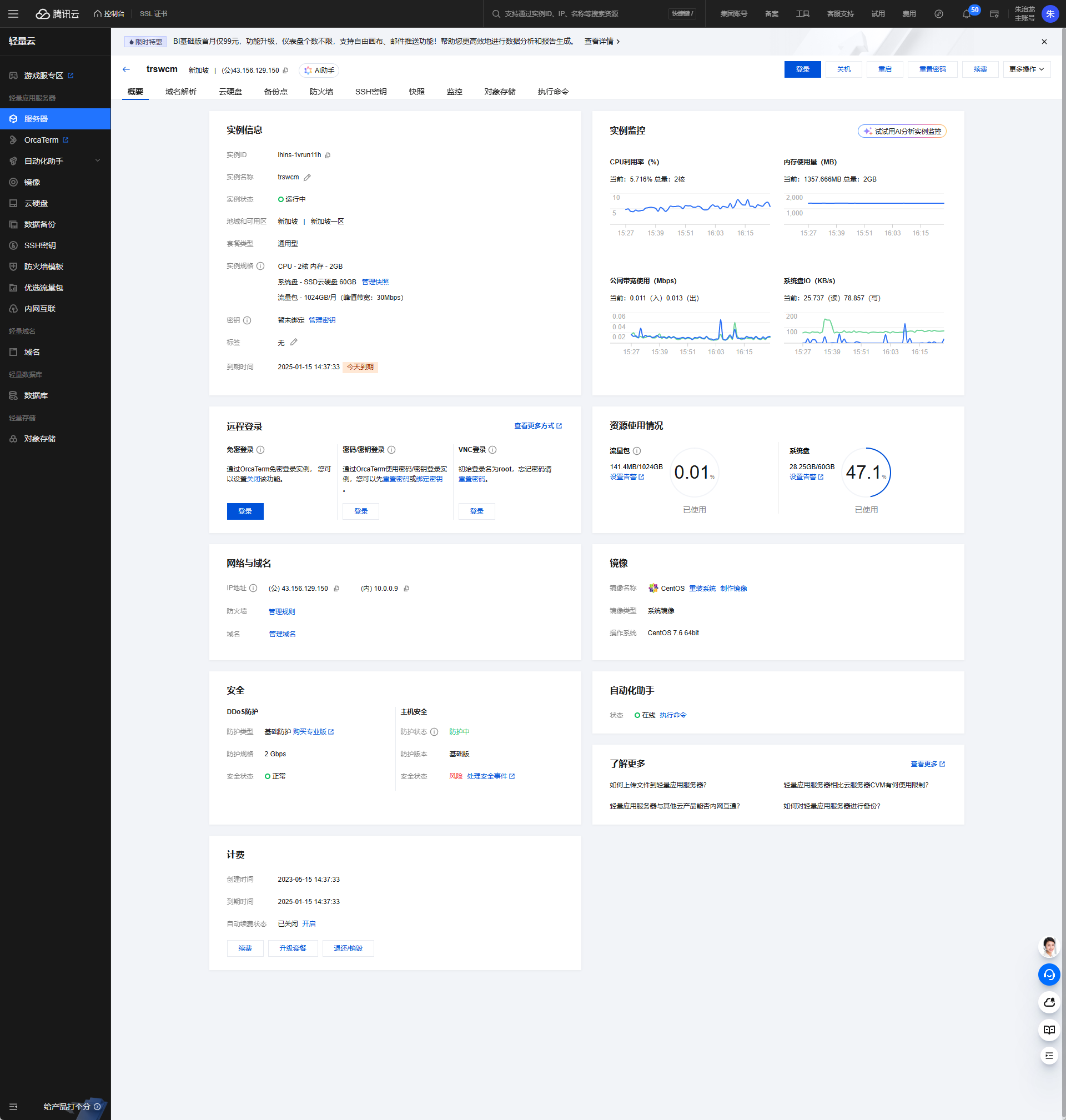

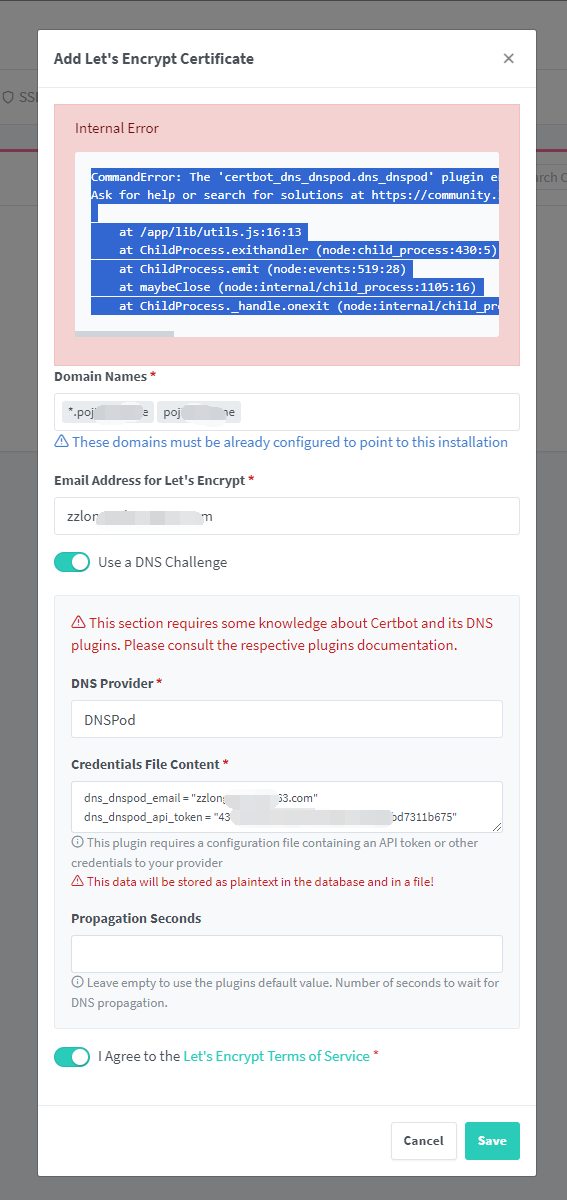

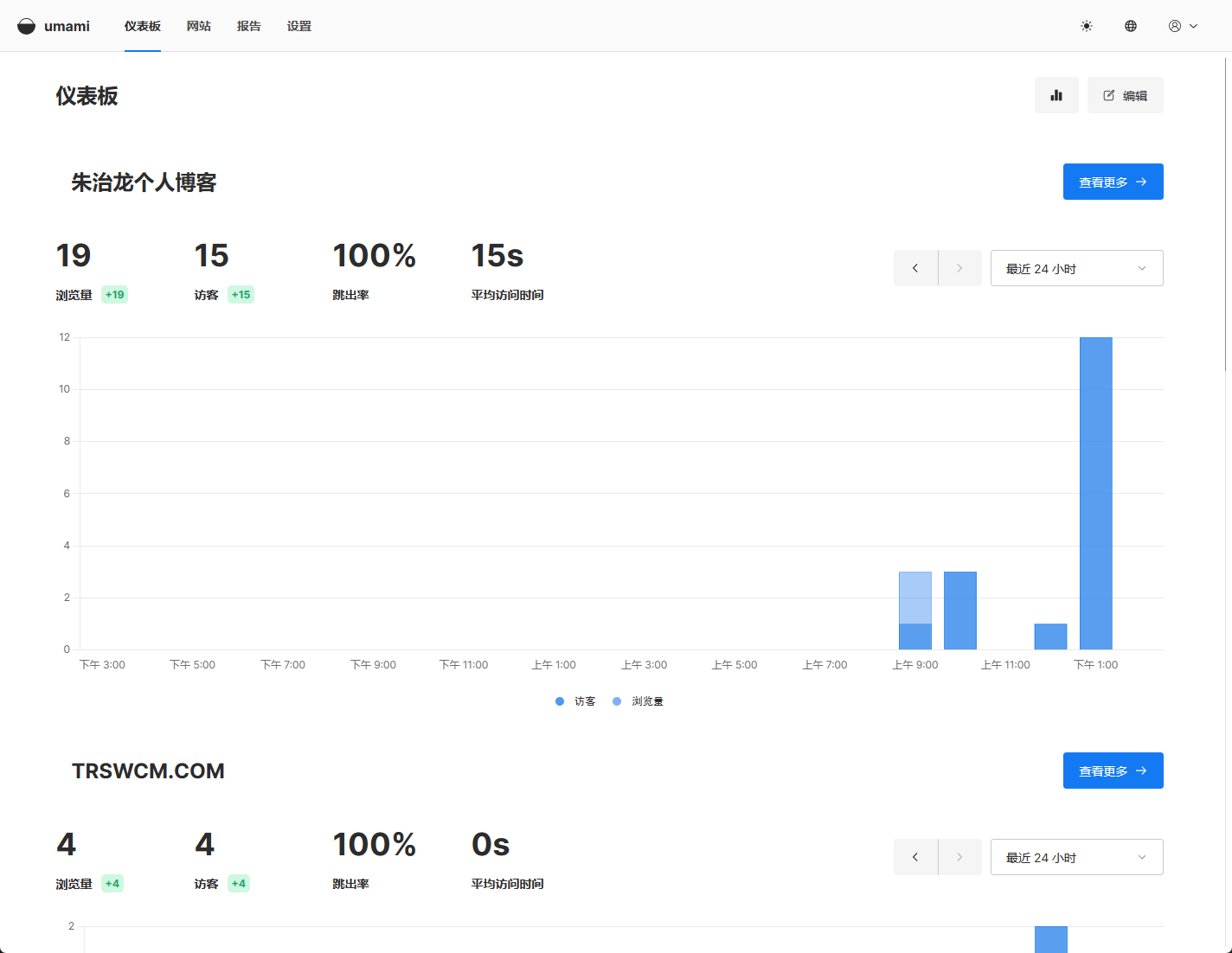

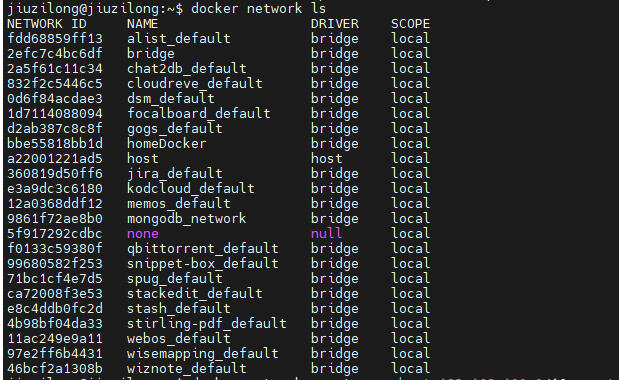

Personal Blog Server Migration Notes

Background

Because the zhuzhilong.cn domain has no ICP filing, sites on it cannot be served from within mainland China. On May 15, 2023, I bought a Tencent Cloud Lighthouse server (2 vCPU / 2 GB RAM / 60 GB disk / 30 Mbps) to host my unfiled sites, and my personal blog moved onto it as well. The server costs 35 CNY per month, which is not expensive, roughly 35 × 12 = 420 CNY per year. Basic server information:

Last year Tencent Cloud ran a promotion: a Lighthouse server with similar specs cost only 99 CNY for a year and could be renewed for two more years:

So I bought another Lighthouse server (2 vCPU / 2 GB RAM / 50 GB disk / 30 Mbps):

That brings three years to just 99 + 99 + 59.4 = 257.4 CNY, a little over half of what the original server costs for one year. For a non-commercial site without much load, every bit saved counts.

Server environment

The original server ran:

- BT Panel (宝塔面板)
- MySQL 5.7
- PHP 5.6
- PHP 7.3

All of these were installed through BT Panel. It is convenient for management, but it uses a lot of resources and carries a risk of leaking server information, so the new server uses:

- Docker
- docker compose + NginxProxyManager
- docker compose + PHP 5.6 + www.trswcm.com
- docker compose + PHP 7.3 + blog.zhuzhilong.cn

New server deployment

Step 1: Install Docker

Follow the official installation docs (https://docs.docker.com/engine/install/). In mainland China, the Alibaba Cloud mirror is recommended.

Step 2: Add a Docker bridge network

A custom Docker bridge network is recommended. On a previous server, once many containers were running, creating networks eventually started failing.

    docker network create --subnet=172.66.0.0/16 --gateway=172.66.0.1 \
      --opt "com.docker.network.bridge.default_bridge"="false" \
      --opt "com.docker.network.bridge.name"="bridge_zzl" \
      --opt "com.docker.network.bridge.enable_icc"="true" \
      --opt "com.docker.network.bridge.enable_ip_masquerade"="true" \
      --opt "com.docker.network.bridge.host_binding_ipv4"="0.0.0.0" \
      --opt "com.docker.network.driver.mtu"="1500" \
      bridge_zzl

Step 3: Deploy MySQL 5.7 with docker compose

    services:
      mysql:
        image: mysql:5.7
        container_name: mysql-5.7
        # With this option, root inside the container has real root privileges;
        # otherwise it only has the privileges of an ordinary user on the host.
        # Set to true, otherwise the data volume may fail to mount and the container won't start
        privileged: true
        restart: always
        networks:
          - net-zzl
        ports:
          - "3316:3306"
        environment:
          MYSQL_ROOT_PASSWORD: PASSWORD123
          MYSQL_USER: zhuzl
          MYSQL_PASSWORD: PASSWORD123
          TZ: Asia/Shanghai
        command: --wait_timeout=31536000 --interactive_timeout=31536000 --max_connections=1000 --default-authentication-plugin=mysql_native_password
        volumes:
          # Map the MySQL data directory to the host to persist data
          #- ./data:/var/lib/mysql
          - ./data:/www/server/data
          # Create the container using the config file on the host
          - ./config/my.cnf:/etc/mysql/my.cnf
          - ../hosts:/etc/hosts
    networks:
      net-zzl:
        name: bridge_zzl
        external: true

Contents of config/my.cnf:

    [client]
    #password = your_password
    port = 3306
    socket = /tmp/mysql.sock
    default-character-set=utf8mb4

    [mysqld]
    port = 3306
    socket = /tmp/mysql.sock
    datadir = /www/server/data
    default_storage_engine = InnoDB
    character-set-server=utf8mb4
    collation-server=utf8mb4_unicode_ci
    performance_schema_max_table_instances = 400
    table_definition_cache = 400
    skip-external-locking
    key_buffer_size = 64M
    max_allowed_packet = 1G
    table_open_cache = 128
    sort_buffer_size = 16M
    net_buffer_length = 4K
    read_buffer_size = 16M
    read_rnd_buffer_size = 256K
    myisam_sort_buffer_size = 256M
    thread_cache_size = 512
    tmp_table_size = 32M
    default_authentication_plugin = mysql_native_password
    lower_case_table_names = 1
    sql-mode=NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES
    explicit_defaults_for_timestamp = true
    #skip-name-resolve
    max_connections = 500
    max_connect_errors = 100
    open_files_limit = 65535
    log-bin=mysql-bin
    binlog_format=mixed
    server-id = 1
    # binlog_expire_logs_seconds = 600000
    slow_query_log=1
    slow-query-log-file=/www/server/data/mysql-slow.log
    long_query_time=3
    #log_queries_not_using_indexes=on
    early-plugin-load = ""
    innodb_data_home_dir = /www/server/data
    innodb_data_file_path = ibdata1:10M:autoextend
    innodb_log_group_home_dir = /www/server/data
    innodb_buffer_pool_size = 4096M
    innodb_log_file_size = 2048M
    innodb_log_buffer_size = 512M
    innodb_flush_log_at_trx_commit = 1
    innodb_lock_wait_timeout = 50
    innodb_max_dirty_pages_pct = 90
    innodb_read_io_threads = 16
    innodb_write_io_threads = 16

    [mysqldump]
    quick
    max_allowed_packet = 500M

    [mysql]
    no-auto-rehash
    default-character-set=utf8mb4

    [myisamchk]
    key_buffer_size = 64M
    sort_buffer_size = 16M
    read_buffer = 2M
    write_buffer = 2M

    [mysqlhotcopy]
    interactive-timeout

Step 4: Deploy Nginx Proxy Manager with docker compose

Official deployment docs: https://nginxproxymanager.com/guide/#quick-setup

docker-compose.yml:

    services:
      npm:
        image: jc21/nginx-proxy-manager:latest
        container_name: npm
        restart: unless-stopped
        networks:
          - net-zzl
        environment:
          - ACME_AGREE=true
          - TZ=Asia/Shanghai
        ports:
          - 80:80
          - 443:443
          - 81:81
        volumes:
          - ./data:/data
          - ./letsencrypt:/etc/letsencrypt
          - ../hosts:/etc/hosts
    networks:
      net-zzl:
        name: bridge_zzl
        external: true

Step 5: Deploy the personal blog blog.zhuzhilong.cn with docker compose

My personal blog (blog.zhuzhilong.cn) runs on Typecho, and its core requirements are PHP 7.x + MySQL. docker-compose.yml:

    services:
      zzlblog:
        image: nginx:latest
        container_name: zzlblog
        networks:
          - net-zzl
        ports:
          - 8100:80
        environment:
          - TZ=Asia/Shanghai
        restart: always
        volumes:
          - ./www:/var/www/html
          - ./logs:/var/log/nginx
          - ./nginx:/etc/nginx/conf.d
          - ../hosts:/etc/hosts
        depends_on:
          - php73
      php73:
        image: yearnfar/typecho-php:latest
        #image: tsund/php:7.2-fpm-alpine
        container_name: php73
        restart: unless-stopped
        networks:
          - net-zzl
        ports:
          - 9073:9000
        environment:
          - TZ=Asia/Shanghai
        volumes:
          - ./www:/var/www/html
          - ../hosts:/etc/hosts
    networks:
      net-zzl:
        name: bridge_zzl
        external: true

The yearnfar/typecho-php:latest image was built directly on the server, mainly following https://github.com/yearnfar/typecho-docker.

nginx/default.conf:

    server {
        listen 80;
        server_name localhost;
        root /var/www/html;
        index index.php;
        access_log /var/log/nginx/zzlblog_access.log main;
        error_log /var/log/nginx/zzlblog_error.log;
        if (!-e $request_filename) {
            rewrite ^(.*)$ /index.php$1 last;
        }
        location ~ [^/]\.php(/|$) {
            root /var/www/html;
            fastcgi_pass php73:9000;
            proxy_set_header Host $host;
            #fastcgi_pass hostserver:9073;
            fastcgi_index index.php;
            #fastcgi_param PATH_INFO $fastcgi_path_info;
            #fastcgi_param PATH_TRANSLATED $document_root$fastcgi_path_info;
            #fastcgi_param SCRIPT_NAME $fastcgi_script_name;
            # fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
            fastcgi_param SCRIPT_FILENAME /var/www/html$fastcgi_script_name;
            include fastcgi_params;
        }
    }

Step 6: Deploy www.trswcm.com with docker compose

www.trswcm.com is a niche site I have maintained for more than ten years. I started it over a decade ago, while implementing TRS products, to share project-implementation experience. It is rarely updated now, but it still gets a dozen or so unique visitors a day and still helps a small group of people. It runs on QYKCMS, and its core requirements are PHP 5.x + MySQL. docker-compose.yml:

    services:
      trswcm:
        image: nginx
        container_name: trswcm
        networks:
          - net-zzl
        ports:
          - 8101:80
        environment:
          - TZ=Asia/Shanghai
        restart: always
        volumes:
          - ./www:/var/www/html
          - ./logs:/var/log/nginx
          - ./nginx:/etc/nginx/conf.d
          - ../hosts:/etc/hosts
        depends_on:
          - php56
      php56:
        image: raccourci/php56:latest
        container_name: php56
        restart: unless-stopped
        networks:
          - net-zzl
        ports:
          - 9056:9000
        environment:
          - TZ=Asia/Shanghai
        volumes:
          - ./www:/var/www/html
          - ../hosts:/etc/hosts
    networks:
      net-zzl:
        name: bridge_zzl
        external: true

nginx/default.conf:

    server {
        listen 80;
        server_name www.trswcm.com localhost;
        root /var/www/html;
        index index.html index.htm index.php default.php default.htm default.html;
        access_log /var/log/nginx/trswcm_access.log main;
        error_log /var/log/nginx/trswcm_error.log;
        #if (!-e $request_filename) {
        #    rewrite ^(.*)$ /index.php$1 last;
        #}
        location ~ [^/]\.php(/|$) {
            root /var/www/html;
            fastcgi_pass php56:9000;
            proxy_set_header Host www.trswcm.com;
            #fastcgi_pass hostserver:9073;
            fastcgi_index index.php;
            #fastcgi_param PATH_INFO $fastcgi_path_info;
            #fastcgi_param PATH_TRANSLATED $document_root$fastcgi_path_info;
            #fastcgi_param SCRIPT_NAME $fastcgi_script_name;
            # fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
            fastcgi_param SCRIPT_FILENAME /var/www/html$fastcgi_script_name;
            include fastcgi_params;
        }
    }

Nginx Proxy Manager configuration

Nginx Proxy Manager (NPM) is a reverse-proxy management tool built on Nginx, designed to simplify Nginx configuration and management. It provides an intuitive web UI for setting up and managing reverse proxies, SSL certificates, access control, and more. In the sections above we ran Nginx Proxy Manager with docker compose, so it can be configured visually through its UI; the most important parts are the SSL certificates and the proxy hosts. Some screenshots:
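The cost comparison in the migration post is simple arithmetic; as a small sketch using the figures quoted there (CNY), `Decimal` keeps the 59.4 payment exact instead of introducing binary floating-point rounding.

```python
from decimal import Decimal

# Figures quoted in the post, in CNY.
MONTHLY_OLD = Decimal("35")
NEW_PAYMENTS = [Decimal("99"), Decimal("99"), Decimal("59.4")]  # three yearly payments

old_yearly = MONTHLY_OLD * 12                      # original server, one year
new_three_years = sum(NEW_PAYMENTS, Decimal("0"))  # new server, three years
ratio = new_three_years / old_yearly               # 3 years new vs. 1 year old
new_monthly = new_three_years / 36                 # average monthly cost, new server
```

This gives 420 CNY for one year on the old server against 257.4 CNY for three years on the new one, a ratio of about 0.61 (the "a little over half" in the post), or an average of 7.15 CNY per month instead of 35.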

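Regarding the custom bridge network in the migration post: before running `docker network create`, the `--subnet`/`--gateway` pair can be sanity-checked with Python's `ipaddress` module. This is a minimal sketch with a hypothetical helper name. One thing it surfaces: 172.66.0.0/16 lies outside the RFC 1918 private block 172.16.0.0/12, so the host routes that publicly assigned range into the bridge; if that matters in your environment, a subnet inside 172.16.0.0/12 (for example 172.20.0.0/16) is one alternative.

```python
import ipaddress

# RFC 1918 private IPv4 blocks.
PRIVATE_BLOCKS = [ipaddress.ip_network(n)
                  for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]


def check_bridge_subnet(subnet: str, gateway: str) -> dict[str, bool]:
    """Sanity-check a --subnet/--gateway pair before `docker network create`."""
    net = ipaddress.ip_network(subnet)  # strict: rejects host bits, e.g. 172.66.0.1/16
    gw = ipaddress.ip_address(gateway)
    return {
        "gateway_in_subnet": gw in net,
        "gateway_is_host_address": gw not in (net.network_address, net.broadcast_address),
        "rfc1918_private": any(net.subnet_of(block) for block in PRIVATE_BLOCKS),
    }
```

For the values in the post, `check_bridge_subnet("172.66.0.0/16", "172.66.0.1")` reports the gateway as valid but `rfc1918_private` as `False`.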
个人博客服务器迁移记录 背景说明由于 zhuzhilong.cn 域名未备案,在国内不能直接访问,于2023年5月15日,在腾讯云买了台轻量应用服务器(配置:2H2G60G30M)来部署未备案的网站,我的个人博客也迁移到了这个服务器上,服务器35元/月,价格也不算贵,一年的服务器费用大概35*12=420元,服务器基本信息如下:去年腾讯云搞活动,有一款配置差不多的轻量应用服务器只需要99元一年,并且可以续费两年:便新购了另外一台轻量应用服务器(配置:2H2G50G30M):这样算下来 3 年只需要 99+99+59.4=257.4 元,也就是3年的费用相当于原服务器一年费用的一半多一点,作为非营运网站,也没有较高的负载,能省一点是一点吧。服务器环境说明原服务器上部署了如下环境:宝塔面板MySQL 5.7php 5.6php 7.3以上环境都是基于宝塔面板进行集成安装的,宝塔面板在管理上挺方便,但是占用资源较多,且存在服务器信息泄露的风险,新服务器便计划采用如下环境:Dockerdocker compose + NginxProxyManagerdocker compose + php 5.6 Z + www.trswcm.comdocker compose + php 7.3 + blog.zhuzhilong.cn新服务器部署记录1、安装 DockerDocker 的安装参考 官网的安装文档(https://docs.docker.com/engine/install/)进行安装即可,如果是国内环境的话,推荐使用阿里云镜像2、添加 Docker 桥接网络推荐使用自定义 Docker 桥接网络,之前有服务器跑的容器多了,到后面会报创建网络失败的情况docker network create --subnet=172.66.0.0/16 --gateway=172.66.0.1 --opt "com.docker.network.bridge.default_bridge"="false" --opt "com.docker.network.bridge.name"="bridge_zzl" --opt "com.docker.network.bridge.enable_icc"="true" --opt "com.docker.network.bridge.enable_ip_masquerade"="true" --opt "com.docker.network.bridge.host_binding_ipv4"="0.0.0.0" --opt "com.docker.network.driver.mtu"="1500" bridge_zzl3、使用docker compose 部署 MySQL 5.7services: mysql: image: mysql:5.7 container_name: mysql-5.7 #使用该参数,container内的root拥有真正的root权限,否则,container内的root只是外部的一个普通用户权限 #设置为true,不然数据卷可能挂载不了,启动不起 privileged: true restart: always networks: - net-zzl ports: - "3316:3306" environment: MYSQL_ROOT_PASSWORD: PASSWORD123 MYSQL_USER: zhuzl MYSQL_PASSWORD: PASSWORD123 TZ: Asia/Shanghai command: --wait_timeout=31536000 --interactive_timeout=31536000 --max_connections=1000 --default-authentication-plugin=mysql_native_password volumes: #映射mysql的数据目录到宿主机,保存数据 #- ./data:/var/lib/mysql - ./data:/www/server/data #根据宿主机下的配置文件创建容器 - ./config/my.cnf:/etc/mysql/my.cnf - ../hosts:/etc/hosts networks: net-zzl: name: bridge_zzl external: true config/my.cnf 内容:[client] #password = your_password port = 3306 socket = /tmp/mysql.sock default-character-set=utf8mb4 [mysqld] port = 3306 
socket = /tmp/mysql.sock datadir = /www/server/data default_storage_engine = InnoDB character-set-server=utf8mb4 collation-server=utf8mb4_unicode_ci performance_schema_max_table_instances = 400 table_definition_cache = 400 skip-external-locking key_buffer_size = 64M max_allowed_packet = 1G table_open_cache = 128 sort_buffer_size = 16M net_buffer_length = 4K read_buffer_size = 16M read_rnd_buffer_size = 256K myisam_sort_buffer_size = 256M thread_cache_size = 512 tmp_table_size = 32M default_authentication_plugin = mysql_native_password lower_case_table_names = 1 sql-mode=NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES explicit_defaults_for_timestamp = true #skip-name-resolve max_connections = 500 max_connect_errors = 100 open_files_limit = 65535 log-bin=mysql-bin binlog_format=mixed server-id = 1 # binlog_expire_logs_seconds = 600000 slow_query_log=1 slow-query-log-file=/www/server/data/mysql-slow.log long_query_time=3 #log_queries_not_using_indexes=on early-plugin-load = "" innodb_data_home_dir = /www/server/data innodb_data_file_path = ibdata1:10M:autoextend innodb_log_group_home_dir = /www/server/data innodb_buffer_pool_size = 4096M innodb_log_file_size = 2048M innodb_log_buffer_size = 512M innodb_flush_log_at_trx_commit = 1 innodb_lock_wait_timeout = 50 innodb_max_dirty_pages_pct = 90 innodb_read_io_threads = 16 innodb_write_io_threads = 16 [mysqldump] quick max_allowed_packet = 500M [mysql] no-auto-rehash default-character-set=utf8mb4 [myisamchk] key_buffer_size = 64M sort_buffer_size = 16M read_buffer = 2M write_buffer = 2M [mysqlhotcopy] interactive-timeout

4. Deploy Nginx Proxy Manager with docker compose

Official deployment docs: https://nginxproxymanager.com/guide/#quick-setup

The docker-compose.yml file:

```yaml
services:
  npm:
    image: jc21/nginx-proxy-manager:latest
    container_name: npm
    restart: unless-stopped
    networks:
      - net-zzl
    environment:
      - ACME_AGREE=true
      - TZ=Asia/Shanghai
    ports:
      - 80:80
      - 443:443
      - 81:81
    volumes:
      - ./data:/data
      - ./letsencrypt:/etc/letsencrypt
      - ../hosts:/etc/hosts
networks:
  net-zzl:
    name: bridge_zzl
    external: true
```

5. Deploy the personal blog blog.zhuzhilong.cn with docker compose

My personal blog (blog.zhuzhilong.cn) is built with Typecho; the core runtime it needs is PHP 7.x + MySQL. The docker-compose.yml file:

```yaml
services:
  zzlblog:
    image: nginx:latest
    container_name: zzlblog
    networks:
      - net-zzl
    ports:
      - 8100:80
    environment:
      - TZ=Asia/Shanghai
    restart: always
    volumes:
      - ./www:/var/www/html
      - ./logs:/var/log/nginx
      - ./nginx:/etc/nginx/conf.d
      - ../hosts:/etc/hosts
    depends_on:
      - php73
  php73:
    image: yearnfar/typecho-php:latest
    #image: tsund/php:7.2-fpm-alpine
    container_name: php73
    restart: unless-stopped
    networks:
      - net-zzl
    ports:
      - 9073:9000
    environment:
      - TZ=Asia/Shanghai
    volumes:
      - ./www:/var/www/html
      - ../hosts:/etc/hosts
networks:
  net-zzl:
    name: bridge_zzl
    external: true
```

The yearnfar/typecho-php:latest image was built directly on the server, based mainly on https://github.com/yearnfar/typecho-docker.

The nginx/default.conf file:

```nginx
server {
    listen 80;
    server_name localhost;
    root /var/www/html;
    index index.php;
    access_log /var/log/nginx/zzlblog_access.log main;
    error_log /var/log/nginx/zzlblog_error.log;

    # Typecho pretty URLs: send anything that is not an existing file to index.php
    if (!-e $request_filename) {
        rewrite ^(.*)$ /index.php$1 last;
    }

    location ~ [^/]\.php(/|$) {
        root /var/www/html;
        fastcgi_pass php73:9000;
        # proxy_set_header only affects proxy_pass; it has no effect on fastcgi_pass
        proxy_set_header Host $host;
        #fastcgi_pass hostserver:9073;
        fastcgi_index index.php;
        #fastcgi_param PATH_INFO $fastcgi_path_info;
        #fastcgi_param PATH_TRANSLATED $document_root$fastcgi_path_info;
        #fastcgi_param SCRIPT_NAME $fastcgi_script_name;
        # fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_param SCRIPT_FILENAME /var/www/html$fastcgi_script_name;
        include fastcgi_params;
    }
}
```

6. Deploy www.trswcm.com with docker compose

www.trswcm.com is a niche website I have maintained for more than ten years. I started it over a decade ago, while implementing TRS products, to share project implementation experience. It is rarely updated now, but it still gets a dozen or so unique visitors a day and still helps a small group of people. It is built with QYKCMS, and the core runtime it needs is PHP 5.x + MySQL. The docker-compose.yml file:

```yaml
services:
  trswcm:
    image: nginx
    container_name: trswcm
    networks:
      - net-zzl
    ports:
      - 8101:80
    environment:
      - TZ=Asia/Shanghai
    restart: always
    volumes:
      - ./www:/var/www/html
      - ./logs:/var/log/nginx
      - ./nginx:/etc/nginx/conf.d
      - ../hosts:/etc/hosts
    depends_on:
      - php56
  php56:
    image: raccourci/php56:latest
    container_name: php56
    restart: unless-stopped
    networks:
      - net-zzl
    ports:
      - 9056:9000
    environment:
      - TZ=Asia/Shanghai
    volumes:
      - ./www:/var/www/html
      - ../hosts:/etc/hosts
networks:
  net-zzl:
    name: bridge_zzl
    external: true
```

The nginx/default.conf file:

```nginx
server {
    listen 80;
    server_name www.trswcm.com localhost;
    root /var/www/html;
    index index.html index.htm index.php default.php default.htm default.html;
    access_log /var/log/nginx/trswcm_access.log main;
    error_log /var/log/nginx/trswcm_error.log;

    #if (!-e $request_filename) {
    #    rewrite ^(.*)$ /index.php$1 last;
    #}

    location ~ [^/]\.php(/|$) {
        root /var/www/html;
        fastcgi_pass php56:9000;
        # proxy_set_header only affects proxy_pass; it has no effect on fastcgi_pass
        proxy_set_header Host www.trswcm.com;
        #fastcgi_pass hostserver:9073;
        fastcgi_index index.php;
        #fastcgi_param PATH_INFO $fastcgi_path_info;
        #fastcgi_param PATH_TRANSLATED $document_root$fastcgi_path_info;
        #fastcgi_param SCRIPT_NAME $fastcgi_script_name;
        # fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_param SCRIPT_FILENAME /var/www/html$fastcgi_script_name;
        include fastcgi_params;
    }
}
```

Nginx Proxy Manager configuration

Nginx Proxy Manager (NPM) is an Nginx-based reverse proxy management tool designed to simplify configuring and managing Nginx. It provides an intuitive web UI for setting up and managing reverse proxies, SSL certificates, access control and more. In the sections above we ran Nginx Proxy Manager with docker compose, so it can now be configured visually through its UI; the most important parts are the SSL certificate and proxy host settings. Some screenshots of the UI:
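To see which requests the two server blocks above hand over to PHP-FPM, the Python sketch below replays the location regex `[^/]\.php(/|$)` and the Typecho rewrite `rewrite ^(.*)$ /index.php$1 last;` (active in the blog's config, commented out in the trswcm one) on sample URIs. It is an approximation, assuming Python's `re` behaves like nginx's PCRE for this simple pattern; the `existing_files` set is a hypothetical stand-in for the `!-e $request_filename` check against /var/www/html.

```python
from __future__ import annotations

import re

# Same pattern as `location ~ [^/]\.php(/|$)`: ".php" preceded by a
# non-slash character and followed by "/" or the end of the URI.
PHP_LOCATION = re.compile(r"[^/]\.php(/|$)")


def is_php_request(uri: str) -> bool:
    """True if nginx would route this URI to the PHP-FPM location block."""
    return PHP_LOCATION.search(uri) is not None


def typecho_rewrite(uri: str, existing_files: set[str]) -> str:
    """Mimic `if (!-e $request_filename) { rewrite ^(.*)$ /index.php$1 last; }`.

    existing_files is a hypothetical stand-in for what exists under the web
    root; real nginx `-e` also accepts directories and symlinks.
    """
    if uri in existing_files:
        return uri  # exists on disk: served as-is
    return "/index.php" + uri  # otherwise routed through index.php


if __name__ == "__main__":
    files = {"/index.php", "/usr/themes/default/style.css"}
    for uri in ["/archives/12/", "/usr/themes/default/style.css"]:
        rewritten = typecho_rewrite(uri, files)
        print(uri, "->", rewritten, "| php:", is_php_request(rewritten))
```

A pretty URL such as `/archives/12/` becomes `/index.php/archives/12/`, which the PHP location matches, while static files never reach PHP-FPM.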

Configuring email delivery for a Docker-based GitLab

Background

As an old-school programmer I occasionally take on freelance projects. Many of them involve code-security concerns, which makes online code hosting services such as GitLab and Gitee a poor fit, so I set up my own Git service on my development server. There are many options, such as Gitea, Gogs and GitLab. Since my company mostly uses GitLab and it is powerful enough, I chose GitLab as the server. GitLab has set up JiHu (https://gitlab.cn/) to operate GitLab in China, so I deployed the JiHu edition. The full docker-compose.yml is:

```yaml
services:
  gitlab:
    image: 'registry.gitlab.cn/omnibus/gitlab-jh:latest'
    # image: 'registry.gitlab.cn/omnibus/gitlab-jh:16.11.3'
    #image: 'registry.gitlab.cn/omnibus/gitlab-jh:16.7.7'
    restart: always
    container_name: gitlab
    hostname: 'git.work.zhuzhilong.com'
    environment:
      GITLAB_OMNIBUS_CONFIG: |
        external_url 'http://git.work.zhuzhilong.com'
        # Add any other gitlab.rb configuration here, each on its own line
        alertmanager['enable']=false
    networks:
      - net-zzl
    ports:
      - '8007:80'
      - '2223:22'
    volumes:
      - './config:/etc/gitlab'
      - './logs:/var/log/gitlab'
      - './data:/var/opt/gitlab'
      - ../hosts:/etc/hosts
      - /etc/timezone:/etc/timezone:ro
      - /etc/localtime:/etc/localtime:ro
    shm_size: '256m'
networks:
  net-zzl:
    name: bridge_zzl
    external: true
```

Development involves several team members working together, so GitLab's built-in email notifications are well worth having. This time we hook our GitLab instance up to email. The steps are:

1. Enter the Docker container

```bash
docker exec -it gitlab /bin/bash
```

2. Edit the /etc/gitlab/gitlab.rb file

{alert type="info"}To avoid mistakes, back up the system before changing the configuration. The backup command is: gitlab-rake gitlab:backup:create{/alert}

Note that this backup does not include /etc/gitlab/gitlab.rb or /etc/gitlab/gitlab-secrets.json; copy those separately (in this setup they live in ./config on the host).

Change the email-related settings. The main configuration is:

```ruby
### GitLab email server settings
###! Docs: https://docs.gitlab.com/omnibus/settings/smtp.html
###! **Use smtp instead of sendmail/postfix.**

gitlab_rails['smtp_enable'] = true
gitlab_rails['smtp_address'] = "smtp.feishu.cn"
gitlab_rails['smtp_port'] = 465
gitlab_rails['smtp_user_name'] = "sender@zhuzhilong.com"
gitlab_rails['smtp_password'] = "xxxxxxxx"
gitlab_rails['smtp_domain'] = "mail.feishu.cn"
gitlab_rails['smtp_authentication'] = "login"
# gitlab_rails['smtp_enable_starttls_auto'] = true
gitlab_rails['smtp_tls'] = true
gitlab_rails['smtp_pool'] = false

###! **Can be: 'none', 'peer', 'client_once', 'fail_if_no_peer_cert'**
###! Docs: http://api.rubyonrails.org/classes/ActionMailer/Base.html
# gitlab_rails['smtp_openssl_verify_mode'] = 'none'

# gitlab_rails['smtp_ca_path'] = "/etc/ssl/certs"
# gitlab_rails['smtp_ca_file'] = "/etc/ssl/certs/ca-certificates.crt"

### Email Settings

gitlab_rails['gitlab_email_enabled'] = true

##! If your SMTP server does not like the default 'From: gitlab@gitlab.example.com'
##! can change the 'From' with this setting.
gitlab_rails['gitlab_email_from'] = 'xxxx@zhuzhilong.com'
gitlab_rails['gitlab_email_display_name'] = '朱治龙git'
gitlab_rails['gitlab_email_reply_to'] = 'reply@zhuzhilong.com'
# gitlab_rails['gitlab_email_subject_suffix'] = ''
# gitlab_rails['gitlab_email_smime_enabled'] = false
# gitlab_rails['gitlab_email_smime_key_file'] = '/etc/gitlab/ssl/gitlab_smime.key'
# gitlab_rails['gitlab_email_smime_cert_file'] = '/etc/gitlab/ssl/gitlab_smime.crt'
# gitlab_rails['gitlab_email_smime_ca_certs_file'] = '/etc/gitlab/ssl/gitlab_smime_cas.crt'
```

3. Apply the configuration and restart the services

```bash
gitlab-ctl reconfigure && gitlab-ctl restart
```

4. Verify email delivery

Add a new email address under Profile -> Emails. Once it is added, the mailbox receives a verification email like the one below, which means the configuration works:
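If no verification email arrives, it helps to test the SMTP account on its own, outside GitLab. The Python sketch below assumes the same Feishu settings as above: smtp.feishu.cn on port 465 with implicit TLS, which is what `smtp_tls = true` selects. The recipient address and the `send_test_mail` helper are hypothetical; sending needs network access and the real password, so only message construction is exercised offline.

```python
import smtplib
import ssl
from email.message import EmailMessage

SMTP_HOST = "smtp.feishu.cn"  # same as gitlab_rails['smtp_address']
SMTP_PORT = 465               # implicit TLS, matching smtp_tls = true


def build_test_message(sender: str, recipient: str) -> EmailMessage:
    """Build a plain-text test message (no network access)."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = "GitLab SMTP test"
    msg.set_content("If you can read this, the SMTP settings work.")
    return msg


def send_test_mail(user: str, password: str, recipient: str) -> None:
    """Hypothetical helper: log in over implicit TLS and send one message."""
    context = ssl.create_default_context()  # verifies the server certificate
    with smtplib.SMTP_SSL(SMTP_HOST, SMTP_PORT, context=context, timeout=10) as smtp:
        smtp.login(user, password)  # smtplib picks an AUTH method the server offers
        smtp.send_message(build_test_message(user, recipient))
```

For example, `send_test_mail("sender@zhuzhilong.com", "<password>", "someone@example.com")`. If this works but GitLab still sends nothing, GitLab's own test can be run inside the container via `gitlab-rails console` with `Notify.test_email('someone@example.com', 'Subject', 'Body').deliver_now`.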


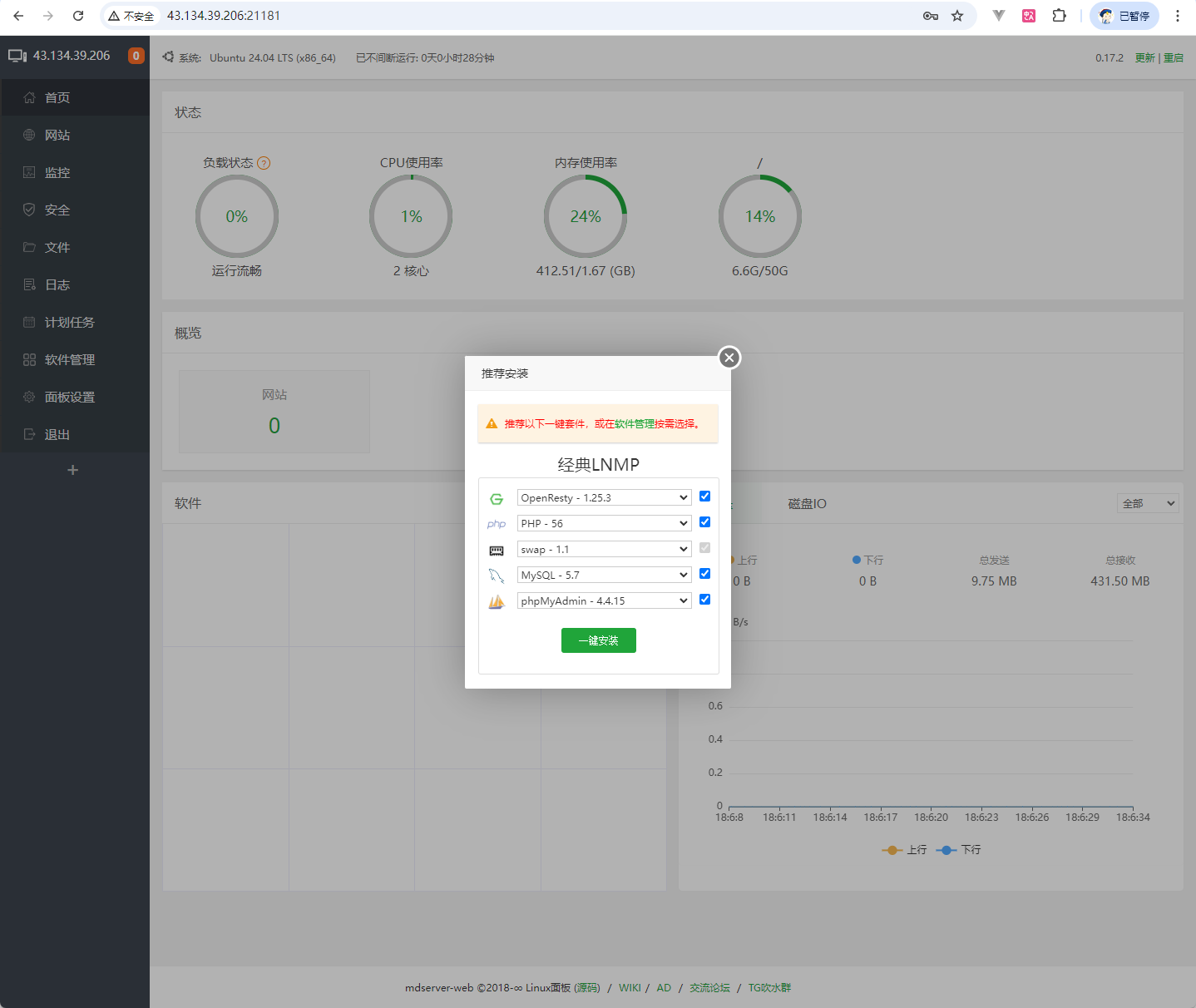

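mdserver-web, an open-source, free and simple Linux server management panel: installation and first impressions

Background

I have been using the BT (宝塔) panel for a while. It greatly simplifies day-to-day Linux maintenance and makes it almost foolproof. However, the BT panel is somewhat controversial when it comes to user data collection and resource usage. I also tried 1Panel: its interface is modern and attractive, but it actually uses more resources than the BT panel and is a little less easy to use. Recently I read on a WeChat official account about MDServer-Web, an open-source panel that imitates the BT interface. As of this writing (2024-09-29) it has 4k+ stars on GitHub, so I decided to give it a try.

Introduction

MDServer-Web is an open-source, free and simple Linux server management panel. It is similar to the well-known BT panel but is described as more secure, and it supports many features. It is easy to install, and the author promises not to sell data, not to monitor users and not to inject malware.

Features:
✅ SSH terminal
✅ Panel favorites
✅ Website subdirectory binding
✅ Website backup
✅ Plugin-based management
✅ Automatic update optimization
✅ Supports OpenResty, PHP 5.2-8.1, MySQL, MongoDB, Memcached, Redis, etc.
✅ More...

Installation

Install with the command copied from the official site:

```bash
curl --insecure -fsSL https://cdn.jsdelivr.net/gh/midoks/mdserver-web@latest/scripts/install.sh | bash
```

When installation finishes, it prints:

```
starting mw-tasks... done
.stopping mw-tasks... done
stopping mw-panel... cli.sh: line 20: 31687 Killed python3 task.py >> ${DIR}/logs/task.log 2>&1
done
starting mw-tasks... done
starting mw-panel... .........done
==================================================================
MW-Panel default info!
==================================================================
MW-Panel-Url: http://43.134.39.206:54724/4tt3ow6j
username: tmsvus16
password: koaumgpj
Warning:
If you cannot access the panel.
release the following port (54724|80|443|22) in the security group.
==================================================================
Time consumed: 6 Minute!
```

Changing the port

Like the BT panel, it provides a command-line tool for viewing and changing panel settings (menu items translated from the tool's Chinese output):

```
root@VM-12-13-ubuntu:/data/dockerRoot# mw
===============mdserver-web cli tools=================
(1)   Restart panel service
(2)   Stop panel service
(3)   Start panel service
(4)   Reload panel service
(5)   Change panel port
(10)  Show default panel info
(11)  Change panel password
(12)  Change panel username
(13)  Show panel error log
(20)  Disable BasicAuth
(21)  Unbind domain
(22)  Unbind panel SSL
(23)  Enable IPv6 support
(24)  Disable IPv6 support
(25)  Open SSH port in firewall
(26)  Disable two-factor authentication
(27)  Show firewall info
(100) Show PHP52
(101) Hide PHP52
(200) Switch Linux software mirror
(201) Simple speed test
(0)   Cancel
======================================================
Enter command number: 5
Enter new panel port: 21181
stopping mw-panel... done
starting mw-panel... ...done
==================================================================
MW-Panel default info!
==================================================================
MW-Panel-Url: http://43.134.39.206:21181/4tt3ow6j
username: tmsvus16
password: koaumgpj
Warning:
If you cannot access the panel.
release the following port (21181|80|443|22) in the security group.
==================================================================
```

First impressions

Open the panel in a browser with the port and login details above to reach the main interface shown below:

App installation:

Links

GitHub repository: https://github.com/midoks/mdserver-web
Official site: http://www.midoks.icu/ (lots of ads and little content as of 2024-09-29)
Forum: https://bbs.midoks.icu/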
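The installer's warning says the panel stays unreachable until its port (54724, later 21181) is opened in the cloud security group. A quick way to check this from your own machine is a plain TCP connection test; the minimal Python sketch below uses only the standard library, and the host and port in the usage note are simply the ones from the output above.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and unreachable hosts.
        return False
```

For example, `is_port_open("43.134.39.206", 21181)` run from outside the server: if it returns False while `mw` reports the panel as running, the security group or host firewall is the likely culprit.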


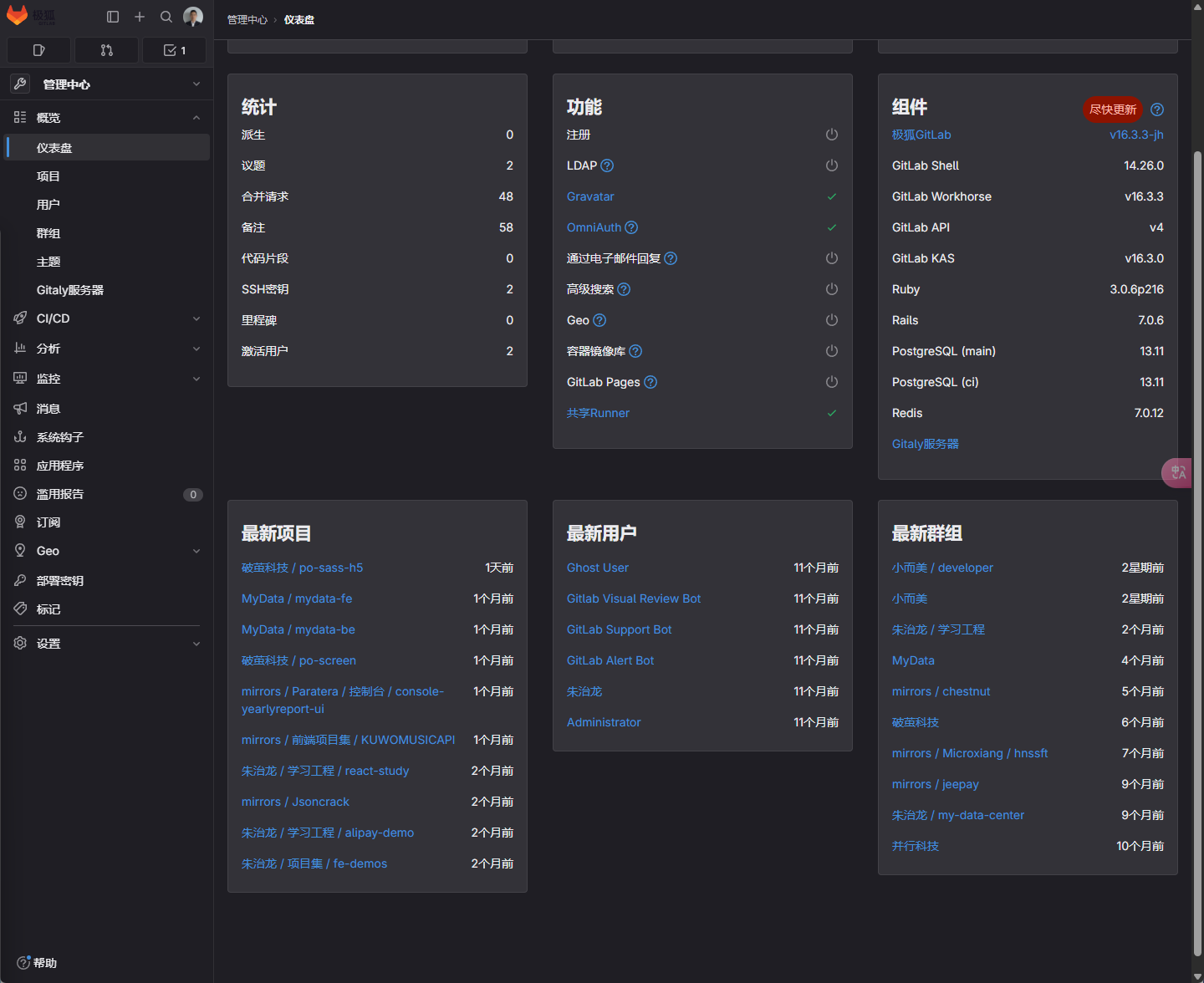

Notes on upgrading GitLab (16.3.3 to 17.3.1)

GitLab on my development server runs in Docker, with this docker-compose.yml:

```yaml
services:
  gitlab:
    image: 'registry.gitlab.cn/omnibus/gitlab-jh:latest'
    restart: always
    container_name: gitlab
    hostname: 'git.work.zhuzhilong.com'
    environment:
      GITLAB_OMNIBUS_CONFIG: |
        external_url 'http://git.work.zhuzhilong.com'
        # Add any other gitlab.rb configuration here, each on its own line
    networks:
      - net-zzl
    ports:
      - '8007:80'
      - '2223:22'
    volumes:
      - './config:/etc/gitlab'
      - './logs:/var/log/gitlab'
      - './data:/var/opt/gitlab'
      - ../hosts:/etc/hosts
    shm_size: '256m'
networks:
  net-zzl:
    name: bridge_zzl
    external: true
```

This GitLab instance had never been updated since it was set up, so today I tried upgrading it. Before upgrading, let's look at the system environment:

Because the image is registry.gitlab.cn/omnibus/gitlab-jh:latest, I first tried simply pulling the newest image to see whether it could be upgraded directly. The pull command is docker compose pull, and the output is:

```
zhuzl@zhuzl-M9-PRO:/data/dockerRoot/apps/gitlab$ docker compose pull
[+] Pulling 10/10
 ✔ gitlab 9 layers [⣿⣿⣿⣿⣿⣿⣿⣿⣿]      0B/0B      Pulled  62.1s
   ✔ 857cc8cb19c0 Already exists   0.0s
   ✔ d388127601d7 Pull complete    1.0s
   ✔ c973ce60899e Pull complete    1.6s
   ✔ d47067d54097 Pull complete    1.2s
   ✔ b37f526cb6d4 Pull complete    1.4s
   ✔ e3e25c0883d4 Pull complete    6.6s
   ✔ 38326bc1340c Pull complete    7.6s
   ✔ dc916e282a43 Pull complete    6.6s
   ✔ 84388f622dc9 Pull complete   44.1s
zhuzl@zhuzl-M9-PRO:/data/dockerRoot/apps/gitlab$
```

Next, start the service with docker compose up -d and then check the logs with docker logs -f gitlab:

```
zhuzl@zhuzl-M9-PRO:/data/dockerRoot/apps/gitlab$ docker logs -f gitlab
Thank you for using GitLab Docker Image!
Current version: gitlab-jh=17.3.1-jh.0

Configure GitLab for your system by editing /etc/gitlab/gitlab.rb file
And restart this container to reload settings.
To do it use docker exec:

  docker exec -it gitlab editor /etc/gitlab/gitlab.rb
  docker restart gitlab

For a comprehensive list of configuration options please see the Omnibus GitLab readme
https://gitlab.com/gitlab-org/omnibus-gitlab/blob/master/README.md

If this container fails to start due to permission problems try to fix it by executing:

  docker exec -it gitlab update-permissions
  docker restart gitlab

Cleaning stale PIDs & sockets
It seems you are upgrading from 16.3.3-jh to 17.3.1.
It is required to upgrade to the latest 16.11.x version first before proceeding.
Please follow the upgrade documentation at https://docs.gitlab.com/ee/update/index.html#upgrading-to-a-new-major-version
```

(The same block is printed three times in total as the container keeps restarting.)

The log shows that the system detected an upgrade from 16.3.3-jh to 17.3.1, but upgrading to 17.x requires upgrading to 16.11.x first. So I changed the image in docker-compose.yml to registry.gitlab.cn/omnibus/gitlab-jh:16.11.3, pulled again, started the service and watched the logs. After the same startup banner, the relevant lines are (printed twice):

```
Current version: gitlab-jh=16.11.3-jh.0
Cleaning stale PIDs & sockets
It seems you are upgrading from 16.3.3-jh to 16.11.3.
It is required to upgrade to the latest 16.7.x version first before proceeding.
Please follow the upgrade documentation at https://docs.gitlab.com/ee/update/#upgrade-paths
```

According to the log, reaching 16.11.3 first requires upgrading to 16.7.x, so I changed the image in docker-compose.yml to registry.gitlab.cn/omnibus/gitlab-jh:16.7.7, pulled the image and started the service again. After a long wait I logged in to GitLab, and the version showed that the first step, upgrading to 16.7.x, was complete:

Then I continued to 16.11.x: after switching the image back to 16.11.3 and restarting the service, and another long wait, it was successfully upgraded to 16.11.3:

Finally I changed the image back to registry.gitlab.cn/omnibus/gitlab-jh:latest and restarted the service. After logging in, it had been upgraded to the latest version, 17.3.1:

After the upgrade, the container log was flooded with errors like these:

```
2024-09-11_04:05:55.21146 ts=2024-09-11T04:05:55.211Z caller=main.go:181 level=info msg="Starting Alertmanager" version="(version=0.27.0, branch=master, revision=0aa3c2aad14cff039931923ab16b26b7481783b5)"
2024-09-11_04:05:55.21148 ts=2024-09-11T04:05:55.211Z caller=main.go:182 level=info build_context="(go=go1.22.5, platform=linux/amd64, user=GitLab-Omnibus, date=, tags=unknown)"
2024-09-11_04:05:55.21183 ts=2024-09-11T04:05:55.211Z caller=cluster.go:179 level=warn component=cluster err="couldn't deduce an advertise address: no private IP found, explicit advertise addr not provided"
2024-09-11_04:05:55.21286 ts=2024-09-11T04:05:55.212Z caller=main.go:221 level=error msg="unable to initialize gossip mesh" err="create memberlist: Failed to get final advertise address: No private IP address found, and explicit IP not provided"
```

A quick search turned up https://gitlab.com/gitlab-org/omnibus-gitlab/-/issues/4556. As suggested there, adding alertmanager['enable'] = false to the configuration is enough. I adjusted docker-compose.yml accordingly; the final version is:

```yaml
services:
  gitlab:
    image: 'registry.gitlab.cn/omnibus/gitlab-jh:latest'
    # image: 'registry.gitlab.cn/omnibus/gitlab-jh:16.11.3'
    # image: 'registry.gitlab.cn/omnibus/gitlab-jh:16.7.7'
    restart: always
    container_name: gitlab
    hostname: 'git.work.zhuzhilong.com'
    environment:
      GITLAB_OMNIBUS_CONFIG: |
        external_url 'http://git.work.zhuzhilong.com'
        # Add any other gitlab.rb configuration here, each on its own line
        alertmanager['enable']=false
    networks:
      - net-zzl
    ports:
      - '8007:80'
      - '2223:22'
    volumes:
      - './config:/etc/gitlab'
      - './logs:/var/log/gitlab'
      - './data:/var/opt/gitlab'
      - ../hosts:/etc/hosts
    shm_size: '256m'
networks:
  net-zzl:
    name: bridge_zzl
    external: true
```
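The logs above amount to a chain of required upgrade stops: from 16.3.3 the container refused to jump straight to 17.3.1 or to 16.11.3, and the path that worked was 16.7.x, then 16.11.x, then 17.3.1. The Python sketch below computes such a path from a list of required stops. The `REQUIRED_STOPS` list is an assumption assembled from the messages seen here plus 16.3 and 17.3; check GitLab's official upgrade path documentation for the authoritative list before upgrading a real instance.

```python
from __future__ import annotations

# Assumed required stops as (major, minor); verify against GitLab's docs.
REQUIRED_STOPS = [(16, 3), (16, 7), (16, 11), (17, 3)]


def parse_version(text: str) -> tuple[int, int]:
    """'16.3.3-jh' -> (16, 3); only major.minor matter for required stops."""
    major, minor = text.split("-")[0].split(".")[:2]
    return int(major), int(minor)


def upgrade_path(current: str, target: str, stops=REQUIRED_STOPS) -> list[tuple[int, int]]:
    """Return the minor versions to pass through, ending with the target."""
    cur, tgt = parse_version(current), parse_version(target)
    path = [s for s in sorted(stops) if cur < s < tgt]  # stops strictly in between
    path.append(tgt)
    return path


if __name__ == "__main__":
    for major, minor in upgrade_path("16.3.3-jh", "17.3.1"):
        print(f"upgrade to the latest {major}.{minor}.x")
```

Each step corresponds to one image tag change in docker-compose.yml, followed by waiting until GitLab is fully up (and its background migrations have finished) before moving on.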


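rinetd: a really handy port-forwarding tool

In 2014 I worked on a project for the Jiangxi Provincial Department of Transportation. Only one jump host in the department could reach the provincial government's intranet online-services, and only one server in the intranet machine room could reach that jump host, yet our project needed those services. So we deployed a port-forwarding tool on the intranet server, and I always remembered how simple it was to use. Recently a development server of mine had the same kind of networking need, but nearly 10 years later I had even forgotten its name. Fortunately I keep work logs, and in my notes from 10 years ago I found the record. This is it: rinetd.

Introduction

rinetd is a simple, easy-to-use port mapping / forwarding / redirection tool. It is typically used to forward network traffic from one port to another, or from one IP address to another, and is particularly suited to cases where service requests must be forwarded from one network address or port to a different one.

Features of rinetd:
Simplicity: configuration is simple; a single configuration file is all you need.
Lightweight: rinetd itself uses very few system resources.
IPv4 support: it redirects IPv4 connections.
Security: you can specify which IP addresses are allowed to use the forwarding, giving a degree of network access control.

Usage

Install rinetd: on most Linux distributions rinetd is available from the package manager. For example, on Debian-based systems (such as Ubuntu):

```bash
sudo apt-get update
sudo apt-get install rinetd
```

Packages for manual installation: windows: rinetd-win.zip; linux: rinetd.tar.gz

Configure rinetd: the configuration file is /etc/rinetd.conf. A basic example:

```
#
# this is the configuration file for rinetd, the internet redirection server
#
# you may specify global allow and deny rules here
# only ip addresses are matched, hostnames cannot be specified here
# the wildcards you may use are * and ?
#
# allow 192.168.2.*
# deny 192.168.2.1?

#
# forwarding rules come here
#
# you may specify allow and deny rules after a specific forwarding rule
# to apply to only that forwarding rule
#
# bindadress    bindport  connectaddress  connectport

# for rocketMQ
# Redirect every connection to port 18090 on this host to port 18080 on 192.168.150.250.
0.0.0.0 18090 192.168.150.250 18080
0.0.0.0 18091 192.168.150.250 18081
0.0.0.0 18092 192.168.150.250 18082
#0.0.0.0 18093 192.168.150.250 18083
0.0.0.0 18076 192.168.150.250 19876

# logging information
logfile /var/log/rinetd.log

# uncomment the following line if you want web-server style logfile format
# logcommon
```

Day-to-day commands:

```bash
# Start the service
sudo systemctl start rinetd
# Restart the service (e.g. after editing /etc/rinetd.conf)
sudo systemctl restart rinetd
# Check the running status
sudo systemctl status rinetd
# Start automatically at boot
sudo systemctl enable rinetd
```

Note: make sure the system firewall rules allow the network traffic rinetd needs.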
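To make the forwarding-rule format concrete (`bindaddress bindport connectaddress connectport`, one rule per line, `#` starting a comment), here is a small Python sketch that extracts the active rules from a rinetd.conf. It is an illustration written for this article, not part of rinetd: it assumes the plain four-column TCP syntax shown above and skips `allow`, `deny`, `logfile` and `logcommon` lines instead of interpreting them.

```python
from __future__ import annotations

from typing import NamedTuple


class Rule(NamedTuple):
    bind_address: str
    bind_port: int
    connect_address: str
    connect_port: int


# Directives from the sample config that are not forwarding rules.
NON_RULE_KEYWORDS = {"allow", "deny", "logfile", "logcommon"}


def parse_rules(text: str) -> list[Rule]:
    """Return the active forwarding rules found in rinetd.conf text."""
    rules = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        fields = line.split()
        if fields[0] in NON_RULE_KEYWORDS:
            continue
        if len(fields) < 4:
            raise ValueError(f"malformed rule: {raw!r}")
        bind_addr, bind_port, conn_addr, conn_port = fields[:4]
        rules.append(Rule(bind_addr, int(bind_port), conn_addr, int(conn_port)))
    return rules
```

Run against the example above, it reports the four uncommented RocketMQ rules and ignores the commented-out 18093 line, which is a quick way to double-check what rinetd will actually listen on.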


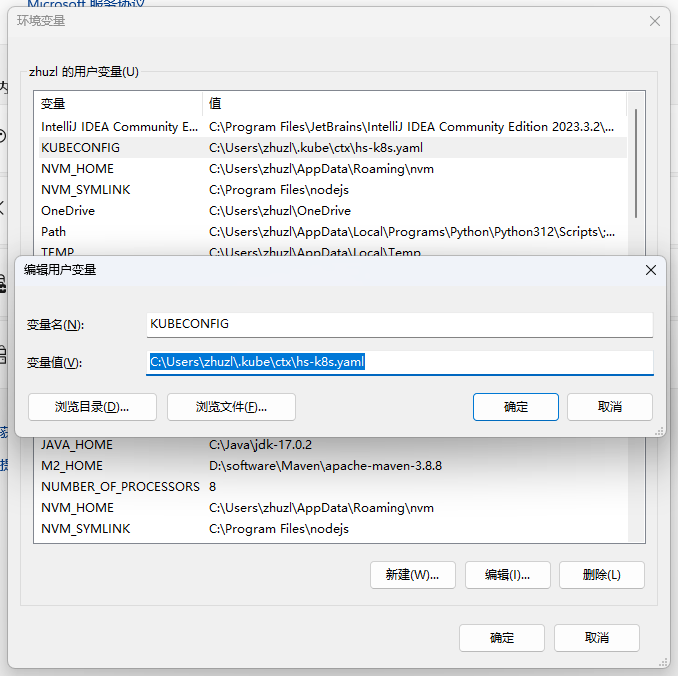

K8S usage notes: viewing application logs and basic management with kubectl

Background

Our company's devIstio environment has recently been migrated in its entirety to Volcano Engine. The SREs operate this environment mainly through K8S, and since the migration the application logs can only be viewed with kubectl, so I worked through the guidance material the SREs provided. I have not studied K8S in depth and lack the big picture, so these notes only scratch the surface from a developer's point of view.

Environment setup

Install kubectl

My development machine runs Windows 11. Any one of the following methods works:

Install via PowerShell:

```powershell
& $([scriptblock]::Create((New-Object Net.WebClient).DownloadString('https://raw.githubusercontent.com/coreweave/kubernetes-cloud/master/getting-started/k8ctl_setup.ps1')))
```

Install via Chocolatey:

```powershell
choco install kubernetes-cli
```

Install via Scoop:

```powershell
scoop install kubectl
```

Configure local access to the K8S cluster

After installing kubectl, copy the kubeconfig file provided by the SREs into the `.kube/cotx/` directory under your user profile (kubectl's default location is `%USERPROFILE%\.kube\config`). If the file is not at the default location, add a KUBECONFIG system environment variable pointing to it, as shown in the figure below:

Getting started

Basic usage

List all pods (across all namespaces):

```
kubectl get pods -A
```

Sample output:

```
$ kubectl get pods -A
NAMESPACE     NAME                                      READY   STATUS             RESTARTS          AGE
console       blsc-ui-594555ff77-z49ln                  1/1     Running            0                 32d
console       consent-forward-8499796656-qr9mc          1/1     Running            0                 49d
console       consent-login-8678cdb6f4-2wjh8            1/1     Running            0                 49d
console       console-api-entry-76d7d8bbc-v7kcm         1/1     Running            0                 17d
console       console-batch-f88bf9dd6-62fwm             1/1     Running            0                 49d
console       console-biz-84f458cfcd-v6pjj              1/1     Running            0                 2d21h
console       console-blsc-kbs-7b66548df6-8lwh7         1/1     Running            0                 49d
console       console-cluster-5ddb65ff54-2k4lf          1/1     Running            0                 49d
console       console-config-server-5d6cf99b9b-wt7s9    1/1     Running            0                 46h
console       console-data-sync-556dc4dc8-z2d9t         1/1     Running            0                 18d
console       console-dev-guide-6bf4bcb79c-48crp        1/1     Running            0                 6d5h
console       console-gateway-fbbf58dd-rcw8f            1/1     Running            0                 32d
console       console-kbs-67cc85848f-7tg9h              1/1     Running            0                 49d
console       console-mobile-blsc-ui-846f5f9795-jbhwc   1/1     Running            0                 48d
console       console-mobile-server-8555b7b5cf-x9l26    1/1     Running            0                 49d
console       console-mobile-ui-79df845ffc-445s9        1/1     Running            0                 32d
console       console-notice-5b8dc5fd68-vmtf7           1/1     Running            0                 39d
console       console-order-66b54dcf8-h4rv2             0/1     CrashLoopBackOff   536 (2m59s ago)   46h
console       console-prototypes-68c984cd88-mpzjb       1/1     Running            0                 38d
console       console-singleton-64b7d87877-qp5x4        1/1     Running            0                 28d
console       console-ui-56c8746566-8bbf5               1/1     Running            0                 3d2h
console       dmc-api-doc-7cf59d7f5d-gjxbs              1/1     Running            0                 49d
console       dmc-core-5dcb7dc65b-fb2gv                 1/1     Running            0                 49d
console       dmc-gateway-59fc6b767f-hzvgf              1/1     Running            0                 49d
console       dmc-magic-boot-5d89987468-r5m7r           1/1     Running            0                 49d
console       dmc-magic-boot-naive-8669fdc878-td7n8     1/1     Running            0                 49d
console       dmc-screen-5f5cb57d89-cfskh               1/1     Running            0                 49d
console       dmc-show-kbs-57b67545f5-4cc9k             1/1     Running            0                 126m
console       dmc-ui-d8f5bd4b-fhmb7                     1/1     Running            0                 49d
console       dmc-worktime-backend-5dc69ccc6b-qf97p     1/1     Running            0                 49d
console       dmc-worktime-ui-7db6df7469-d4fxt          1/1     Running            0                 49d
kube-system   cello-7qv8s                               2/2     Running            2 (49d ago)       111d
kube-system   cello-b8d6p                               2/2     Running            3 (49d ago)       111d
kube-system   cello-mrch7                               2/2     Running            2 (49d ago)       111d
kube-system   cello-pp5wz                               2/2     Running            2 (49d ago)       111d
kube-system   coredns-58cd886448-clswr                  1/1     Running            0                 49d
kube-system   coredns-58cd886448-vlrwd                  1/1     Running            0                 49d
kube-system   metrics-server-7769c76b67-rfnvm           1/1     Running            0                 49d
sup           sup-db-query-fd7675849-sjp8g              1/1     Running            0                 49d
sup           sup-mq-5bc8ccf978-5x5mc                   1/1     Running            0                 49d
sup           sup-nginx-8575c78879-27gxs                1/1     Running            0                 31d
sup           sup-oauth2-85ccd96db7-76rtw               1/1     Running            0                 49d
```

View logs (-f keeps following new output):

```
kubectl logs [podname] -f
```

Sample output:

```
$ kubectl logs dmc-ui-d8f5bd4b-fhmb7 -f
/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration
/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/
/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh
10-listen-on-ipv6-by-default.sh: info: IPv6 listen already enabled
/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh
/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh
/docker-entrypoint.sh: Configuration complete; ready for start up
2024/06/24 03:06:27 [notice] 1#1: using the "epoll" event method
2024/06/24 03:06:27 [notice] 1#1: nginx/1.22.1
2024/06/24 03:06:27 [notice] 1#1: built by gcc 11.2.1 20220219 (Alpine 11.2.1_git20220219)
2024/06/24 03:06:27 [notice] 1#1: OS: Linux 5.10.135-6-velinux1u1-amd64
2024/06/24 03:06:27 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576
2024/06/24 03:06:27 [notice] 1#1: start worker processes
2024/06/24 03:06:27 [notice] 1#1: start worker process 22
2024/06/24 03:06:27 [notice] 1#1: start worker process 23
2024/06/24 03:06:27 [notice] 1#1: start worker process 24
2024/06/24 03:06:27 [notice] 1#1: start worker process 25
2024/06/24 03:06:27 [notice] 1#1: start worker process 26
2024/06/24 03:06:27 [notice] 1#1: start worker process 27
2024/06/24 03:06:27 [notice] 1#1: start worker process 28
2024/06/24 03:06:27 [notice] 1#1: start worker process 29
```

View the last 10 lines of logs (add -f to keep following new output):

```
kubectl logs --tail 10 [podname]
```

Sample output:

```
$ kubectl logs --tail 10 dmc-ui-d8f5bd4b-fhmb7
172.17.16.38 - - [26/Jul/2024:00:50:52 +0000] "GET /favicon.ico HTTP/1.0" 200 16958 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36" "-"
172.17.16.38 - - [31/Jul/2024:17:09:02 +0000] "GET / HTTP/1.0" 200 625 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36" "-"
172.17.16.38 - - [31/Jul/2024:17:09:02 +0000] "GET / HTTP/1.0" 200 625 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 11_0_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36" "-"
172.17.16.38 - - [31/Jul/2024:17:09:02 +0000] "GET /assets/vendor.9901ae39.js HTTP/1.0" 200 1395988 "https://dmc-ui.console.dev.paratera.com/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 11_0_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36" "-"
172.17.16.38 - - [31/Jul/2024:17:09:02 +0000] "GET /assets/index.2058bab1.js HTTP/1.0" 200 100982 "https://dmc-ui.console.dev.paratera.com/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 11_0_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36" "-"
172.17.16.38 - - [31/Jul/2024:17:09:07 +0000] "GET /favicon.ico HTTP/1.0" 200 16958 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36" "-"
172.17.16.38 - - [01/Aug/2024:11:08:22 +0000] "GET / HTTP/1.0" 200 625 "-" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.112 Safari/537.36" "-"
172.17.16.38 - - [01/Aug/2024:11:08:28 +0000] "GET /favicon.ico HTTP/1.0" 200 16958 "-" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.112 Safari/537.36" "-"
172.17.16.38 - - [01/Aug/2024:11:08:28 +0000] "GET /assets/index.2058bab1.js HTTP/1.0" 200 100982 "-" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.112 Safari/537.36" "-"
172.17.16.38 - - [02/Aug/2024:01:35:56 +0000] "GET / HTTP/1.0" 200 625 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36" "-"
```

Use -n to specify the namespace:

```
$ kubectl get pods -n aps
NAME                                 READY   STATUS    RESTARTS   AGE
aps-api-entry-9c74586db-7djmp        1/1     Running   0          11d
aps-config-server-544bb7677c-nwr6x   1/1     Running   0          5d22h
aps-data-etl-649b79cb79-mbwr7        1/1     Running   0          46m
```

Restart an application:

```
$ kubectl rollout restart deployment aps-data-etl -n aps
deployment.apps/aps-data-etl restarted
```
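
When the `get pods -A` listing is long, a pod such as `console-order-66b54dcf8-h4rv2` in CrashLoopBackOff is easy to miss. The bash sketch below filters that listing down to pods that are not fully ready or not Running. The function name `unhealthy_pods` is hypothetical, and it assumes kubectl's default column layout for `get pods -A` (NAMESPACE NAME READY STATUS ...); output of `get pods -n <namespace>` has no NAMESPACE column and would need different field numbers.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods -A` output on stdin and print
# "namespace/name<TAB>ready<TAB>status" for each pod that is not fully ready
# or whose STATUS column is not "Running".
# Assumption: default column order NAMESPACE NAME READY STATUS RESTARTS AGE.
unhealthy_pods() {
  awk '
    NR == 1 && $1 == "NAMESPACE" { next }   # skip the header row
    {
      split($3, r, "/")                     # READY looks like "ready/total"
      if (r[1] != r[2] || $4 != "Running")
        printf "%s/%s\t%s\t%s\n", $1, $2, $3, $4
    }
  '
}
```

Usage: `kubectl get pods -A | unhealthy_pods`. For the sample listing above it would print only the console-order pod. For a crash-looping pod, `kubectl logs --previous [podname] -n [namespace]` shows the logs of the previous (crashed) container instance. Parsing the table text is convenient for a quick look; for anything long-lived, `kubectl get pods -A -o json` gives stable, structured fields.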
